Nearly one in four women who have breast cancer and opt for a breast-saving lumpectomy will need a second surgery, increasing the cost of medical treatment and the risk of complications.

Surgeons freeze and examine surgically removed tissue to determine whether any cancer cells remain in the margin of tissue surrounding the excised tumor. But the accuracy of this approach is limited, and results are not available for days.

What if surgeons had a more accurate way to find out—in real time in the operating room—whether the tumor margins were free of cancer cells?

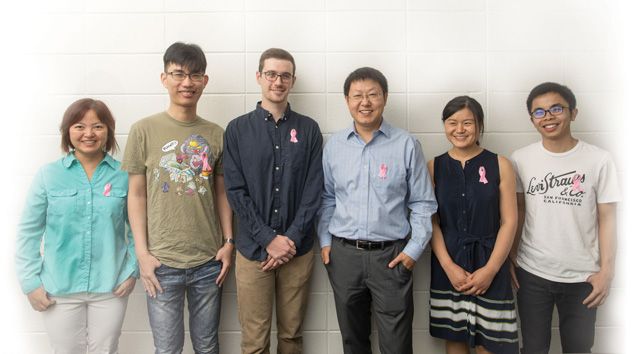

Chao Zhou, assistant professor of electrical engineering, and Sharon Xiaolei Huang, associate professor of computer science and engineering, have joined forces to develop a technique that can detect, in real time, the difference between cancerous and benign cells.

In a recent article published in the journal Medical Image Analysis, the team reported that their technique correctly identified benign versus cancerous cells more than 90 percent of the time. The team includes Lehigh graduate students Sunhua Wan and Ting Xu of computer science and engineering, and Tao Xu of electrical and computer engineering, along with researchers from MIT, Harvard Medical School, and Zhengzhou University.

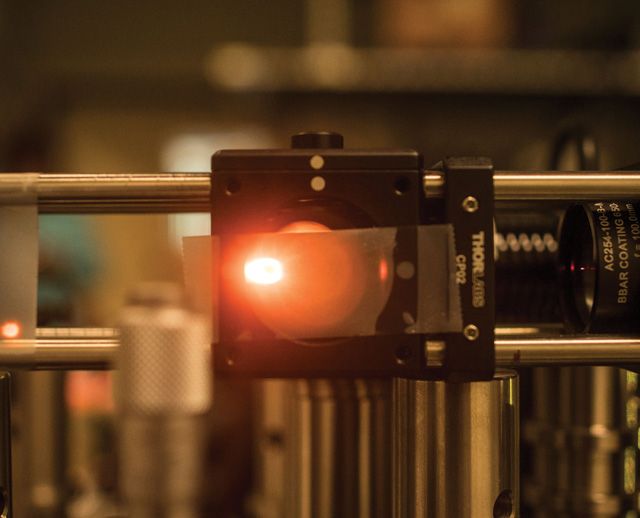

Zhou, whose work focuses on improving biomedical imaging techniques, is a pioneer in the use of optical coherence microscopy (OCM), a noninvasive imaging method that can provide 3D, high-resolution images of biological tissue at the cellular level. Huang, an expert in training computers to recognize visual images, identifies the best way to analyze OCM images to differentiate between benign and cancerous tissue.

“The process takes a large number of images, and labels the types of tissue in the sample,” says Huang. “For every pixel in an image, we know whether it is fat, carcinoma or another cell type. In addition, we extract thousands of different features that can be present in the image, such as texture, color or local contrast, and we use a machine learning algorithm to select which features are the most discriminating.”

After examining multiple types of texture features, Huang and Zhou determined that Local Binary Pattern (LBP) features—visual descriptors that compare the intensity of a center pixel with those of its neighbors—worked best for classifying tissues imaged by OCM.
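To make the LBP idea concrete, here is a minimal sketch of the basic 3x3 form in plain Python. It is not the team's implementation: the function names, the clockwise neighbor ordering, and the choice to treat "greater than or equal" as a 1 bit are all assumptions made for illustration.

```python
# Offsets of the 8 neighbors, clockwise from the top-left corner.
# The ordering is an illustrative assumption; any fixed order works.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, row, col):
    """Return the 8-bit LBP code for the pixel at (row, col).

    Each neighbor contributes a 1 bit when its intensity is at least
    that of the center pixel, and a 0 bit otherwise.
    """
    center = image[row][col]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
        if image[row + dr][col + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Count LBP codes over all interior pixels (border pixels are skipped)."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist


# Hypothetical 3x3 patch of intensities, used only to exercise the code.
patch = [[5, 9, 1],
         [4, 6, 7],
         [2, 3, 8]]
print(lbp_code(patch, 1, 1))
```

The histogram of codes over an image region, rather than any single code, is what would typically serve as the texture feature passed to a classifier.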

The team also integrated two other features. The Average Local Binary Pattern (ALBP) compares the intensity value of each neighbor pixel with the average intensity value of all neighbors. The Block-Based Local Binary Pattern (BLBP) compares the average intensity value of pixels in blocks of a certain shape in a neighborhood around the center pixel.
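The ALBP comparison described above can be sketched the same way. This is an illustrative reading of the one-sentence description, not the published method: the function name, the neighbor ordering, and the "greater than or equal" threshold are assumptions. The block-based variant is left out because the block shapes are not specified here.

```python
# Offsets of the 8 neighbors, clockwise from the top-left corner
# (an illustrative assumption, as is the 3x3 neighborhood size).
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def albp_code(image, row, col):
    """Return an 8-bit code comparing each neighbor with the neighbor mean.

    Unlike basic LBP, the threshold is the average intensity of the
    eight neighbors instead of the center pixel's intensity.
    """
    neighbors = [image[row + dr][col + dc] for dr, dc in NEIGHBOR_OFFSETS]
    mean = sum(neighbors) / len(neighbors)
    code = 0
    for bit, value in enumerate(neighbors):
        if value >= mean:
            code |= 1 << bit
    return code


# Hypothetical 3x3 patch of intensities, used only to exercise the code.
patch = [[5, 9, 1],
         [4, 6, 7],
         [2, 3, 8]]
print(albp_code(patch, 1, 1))
```

Because the threshold depends only on the neighbors, the code is unchanged when the center pixel's own value changes, for example through single-pixel noise.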

Experiments showed that integrating a selected set of these features at multiple scales increased classification accuracy from 81.7 percent to 93.8 percent. For cancer detection using the OCM images, sensitivity reached 100 percent and specificity 85.2 percent.
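For readers unfamiliar with these metrics, the sketch below shows how accuracy, sensitivity, and specificity follow from the counts of correct and incorrect classifications. The function names are illustrative and the counts are made up to exercise the code; they are not the study's data.

```python
def sensitivity(tp, fn):
    """Fraction of cancerous samples correctly flagged as cancerous."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Fraction of benign samples correctly identified as benign."""
    return tn / (tn + fp)


def accuracy(tp, tn, fp, fn):
    """Fraction of all samples classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical counts, NOT taken from the study:
# tp = cancerous called cancerous, fn = cancerous called benign,
# tn = benign called benign,       fp = benign called cancerous.
tp, fn, tn, fp = 8, 2, 9, 1

print(f"sensitivity: {sensitivity(tp, fn):.1%}")
print(f"specificity: {specificity(tn, fp):.1%}")
print(f"accuracy:    {accuracy(tp, tn, fp, fn):.1%}")
```

A sensitivity of 100 percent means no cancerous sample was classified as benign (zero false negatives). A specificity below 100 percent means some benign samples were flagged as cancerous.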