“The stochastic gradient method is the workhorse of machine learning—and thus most of artificial intelligence,” says Luis Nunes Vicente, Timothy J. Wilmott Endowed Chair Professor and Chair of the Department of Industrial and Systems Engineering in Lehigh University’s P.C. Rossin College of Engineering and Applied Science.

The stochastic gradient method is an optimization algorithm that trains, and continually refines, the models used in large-scale applications of machine learning (ML), whether that’s a streaming service suggesting what movie to watch next or a credit card company monitoring online transactions to detect fraud.

You might call stochastic gradient the secret sauce of machine learning. And the “recipe,” says Luis Nunes Vicente, has a not-so-well-known Lehigh connection.

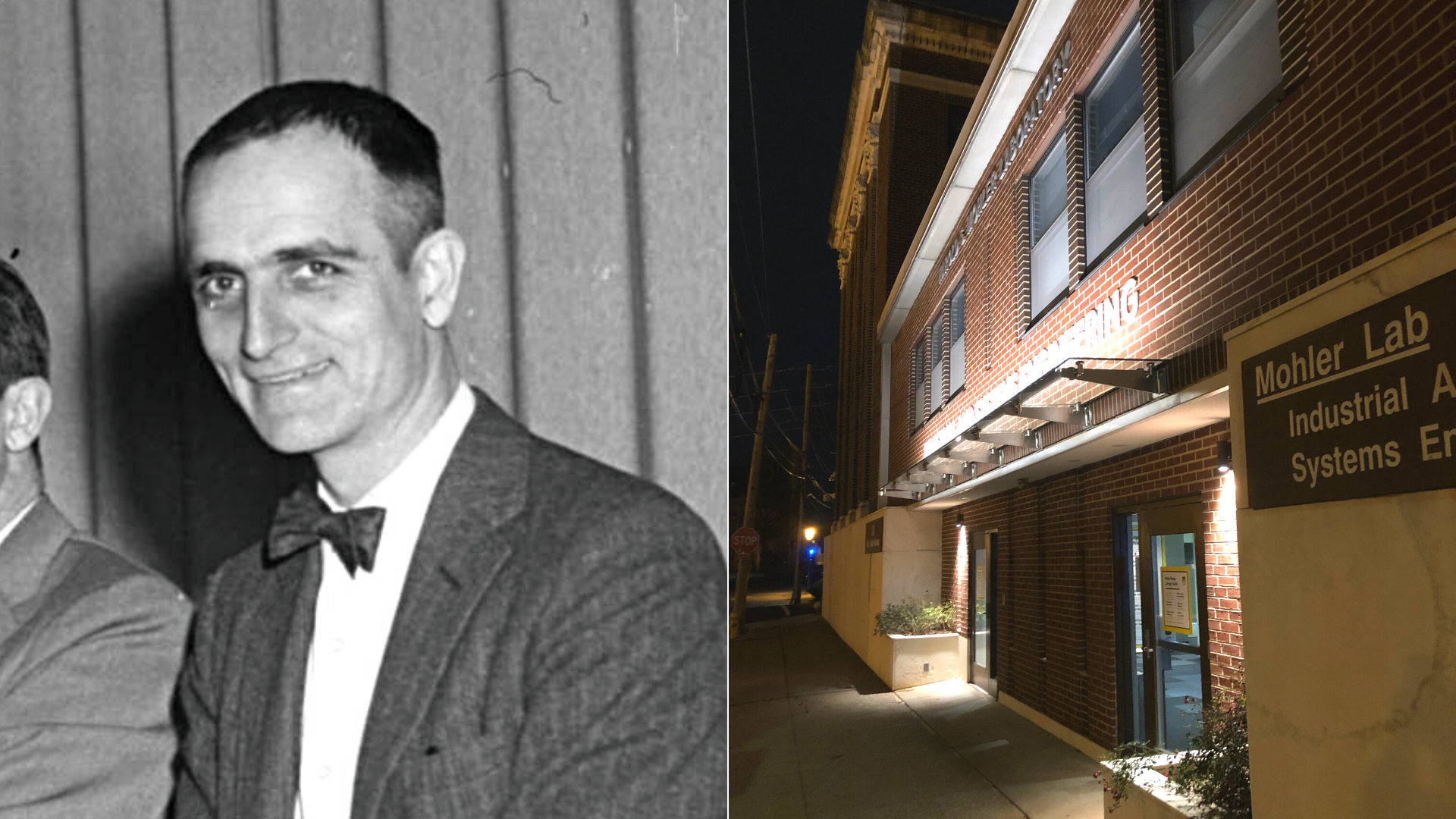

The main idea of the stochastic gradient method was introduced in a seminal 1951 paper published in The Annals of Mathematical Statistics by University of North Carolina mathematician Herbert Robbins and his graduate student Sutton Monro. A few years later, Monro joined the Lehigh ISE faculty, where he taught industrial engineering for 26 years before retiring in 1985.

This outstanding contribution is so foundational to current machine learning applications and research that it is often taken for granted; Luis Nunes Vicente likens it to Louis Pasteur’s contribution to vaccines. If the ML field routinely gave it proper credit, the department chair says, the paper would likely rank among the most highly cited scientific works ever. Even so, he notes, it has been cited more than 10,000 times.

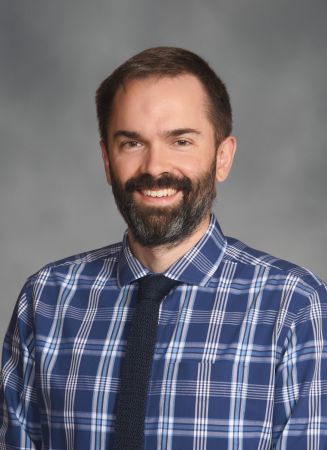

Today, another Lehigh ISE professor, Frank E. Curtis, an expert in continuous mathematical optimization, is leading the charge to modernize and advance the use of the stochastic gradient method in machine learning, with support from a new NSF grant. And his colleague Sihong Xie in the Department of Computer Science and Engineering is one of many Rossin College researchers applying the stochastic gradient technique in their groundbreaking work.

Improving predictive accuracy

In basic terms, a machine learning algorithm builds a model that learns from the historical data it has been fed in order to make predictions about new data it receives. At first, though, those predictions are usually poor, which is to be expected, says Curtis, a founding member of the OptML (Optimization and Machine Learning) Research Group at Lehigh.

For example, an image classification algorithm might initially mistake a picture of a cat for a dog. The typical solution, Curtis says, is to feed the machine more and more data so the algorithm can update its model to make better predictions.

In the field’s parlance, machines learn by minimizing what’s called a “loss function,” which measures how far a model’s predictions fall from the actual results for a given set of data. The worse the predictions, the larger the number the loss function produces.
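To make the idea concrete for a cat-versus-dog classifier, here is a minimal Python sketch using binary cross-entropy, one common loss for this kind of task (our choice for illustration; the article does not name a specific loss). It assumes the model outputs a probability that an image shows a cat, and the function names are hypothetical. A confident wrong answer yields a large loss; a confident right answer yields a loss near zero.

```python
import math


def binary_cross_entropy(p_cat: float, is_cat: bool) -> float:
    """Loss for one image, where p_cat is the model's predicted probability of 'cat'."""
    eps = 1e-12  # keep the probability away from 0 and 1 so log() stays finite
    p = min(max(p_cat, eps), 1 - eps)
    return -math.log(p) if is_cat else -math.log(1 - p)


def dataset_loss(predictions, labels) -> float:
    """Average loss over a data set; smaller values mean more accurate predictions."""
    total = sum(binary_cross_entropy(p, y) for p, y in zip(predictions, labels))
    return total / len(labels)


# A cat photo labeled confidently as a dog, then confidently as a cat.
print(round(binary_cross_entropy(0.01, True), 3))  # 4.605
print(round(binary_cross_entropy(0.99, True), 3))  # 0.01
```

Training amounts to adjusting the model so that `dataset_loss` over the training data gets as small as possible.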

The job of the data scientist is to minimize the loss function, that is, to reduce the degree of error in those predictions. That’s what “optimization” means in the context of ML, explains Curtis, and it’s critical to advancing the technology that is driving innovation in areas such as medical diagnostics, autonomous vehicles, and speech recognition.

With the stochastic gradient method (the approach pioneered by Robbins and Monro), the loss function acts as a kind of barometer: with each iteration, the algorithm gauges the model’s error and adjusts the model to reduce it, working toward the smallest possible error.
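In common modern notation (the symbols here are ours, not the article’s), one step of the method can be written as below. Here $w_k$ holds the model’s parameters after $k$ updates, $f(w;\xi)$ is the loss on a single example or small batch $\xi$, $\xi_k$ is drawn at random from the data, and $\alpha_k > 0$ is the step size. Robbins and Monro’s analysis called for step sizes that shrink, but not too quickly:

$$
w_{k+1} = w_k - \alpha_k \,\nabla f(w_k;\xi_k),
\qquad
\sum_{k=0}^{\infty} \alpha_k = \infty,
\qquad
\sum_{k=0}^{\infty} \alpha_k^2 < \infty .
$$

A standard choice satisfying both conditions is $\alpha_k = \alpha_0/(k+1)$. Because $\xi_k$ is sampled uniformly from the data, the sampled gradient is a noisy but unbiased estimate of the gradient of the average loss, which is why each cheap update makes progress on average. Robbins and Monro originally posed the problem as finding the root of a function observed with noise; applying their scheme to the gradient of the loss gives this form.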

In the image classification example, Curtis says, it would be very slow for a computer to go through every image in the data set before each update to its model (a full-on “wash, rinse, repeat” approach).

The magic of the Robbins-Monro algorithm, he says, is that it employs a sampling method.

“What that means is,” Curtis says, “rather than going through all the data first and then updating its model, the algorithm randomly chooses a small bit of it and uses that to update it; and then it grabs another random bit of data, and does it over and over again until it has gone through all the data.”
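Curtis’s description can be sketched in a few lines of Python under assumptions of our own: a hypothetical one-parameter model $y \approx w x$ fit by least squares to synthetic noisy data, a fixed step size of 0.5, and random batches of 10 examples. The names `make_data`, `sgd_one_pass`, and `full_batch_step` are illustrative, not from any library or from the research described here. One stochastic pass shuffles the data and updates the model on one small batch at a time; for contrast, the full-data approach reads every example and then makes a single update.

```python
import random


def make_data(n=1000, true_w=3.0, seed=0):
    """Hypothetical toy data: y = true_w * x plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [true_w * x + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def loss(w, xs, ys):
    """Mean squared-error loss of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * len(xs))


def grad(w, xs, ys, idx):
    """Average gradient of the loss over the examples whose indices are in idx."""
    return sum((w * xs[i] - ys[i]) * xs[i] for i in idx) / len(idx)


def sgd_one_pass(w, xs, ys, batch_size, step, rng):
    """Grab a random small batch, update, and repeat until all data has been used."""
    order = list(range(len(xs)))
    rng.shuffle(order)
    updates = 0
    for start in range(0, len(order), batch_size):
        w -= step * grad(w, xs, ys, order[start:start + batch_size])
        updates += 1
    return w, updates


def full_batch_step(w, xs, ys, step):
    """Go through every example first, then make one update."""
    return w - step * grad(w, xs, ys, range(len(xs)))


xs, ys = make_data()
w_full = full_batch_step(0.0, xs, ys, step=0.5)
w_sgd, n_updates = sgd_one_pass(0.0, xs, ys, batch_size=10, step=0.5, rng=random.Random(1))
print(n_updates)  # 100
print(loss(w_full, xs, ys), loss(w_sgd, xs, ys))
```

Both routines evaluate the same 1,000 per-example gradients, but on this toy problem the stochastic pass makes 100 updates and finishes with a far lower loss than the single full-data step.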

With the stochastic gradient method, the machine ends up processing the same amount of data as the full-data approach, but it makes many more updates to the model along the way, and those updates improve its predictive accuracy. Essentially, you get a better result more quickly with the same amount of computation.
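A quick count makes the comparison concrete. Suppose, as an illustrative assumption, a data set of $N$ examples processed in random batches of size $b$ (with $b$ dividing $N$). One pass over the data costs the same $N$ per-example gradient evaluations either way, but the number of model updates differs:

$$
\text{updates per pass} =
\begin{cases}
1 & \text{full-data approach},\\[2pt]
N/b & \text{stochastic gradient method}.
\end{cases}
$$

With $N = 1{,}000{,}000$ images and $b = 100$, for instance, that is 10,000 updates instead of one for the same amount of computation.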

Increasing transparency in decision-making

In machine learning, “these optimization algorithms are becoming more and more important, for two reasons,” says Sihong Xie, an assistant professor of computer science and engineering. “One is that, as the data sets become larger and larger, you cannot process all the data at the same time; and the second reason is that we can formulate an optimization problem that will analyze why the machine learning algorithm makes a particular decision.”

The latter is particularly important when applying machine learning to make decisions involving human users. Xie and his research group are investigating the transparency of machine learning models, because, as he explains, despite all of the advances driven by the technology in recent years, there are still significant open questions to be addressed.

One is that the algorithms that learn from data and make predictions remain something of a black box to the end user.

For example, imagine that you and a colleague are on a social network of job-seekers, akin to LinkedIn. You’re both in the same field and equally qualified, but as you discuss your prospects over a cup of coffee, it becomes clear that your colleague has been seeing more high-quality job postings than you have.

It makes you wonder: What information did the site’s algorithm use to generate the recommendations in the first place?

And are you not seeing some postings because of your age or your gender or something in your past experience? If so, the algorithm that produced the recommendations is suboptimal because its results are unfairly discriminatory, says Xie.

In late 2021, Xie’s PhD students Jiaxin Liu and Chao Chen presented the results of their work at two renowned meetings on information retrieval and data mining (Liu, at the ACM International Conference on Information and Knowledge Management, and Chen, at the IEEE International Conference on Data Mining).

The team found that graph neural networks, the current state-of-the-art class of models for this task, can actually exacerbate bias in the data they use to make decisions. In response, they developed an optimization algorithm based on the stochastic gradient method (Lehigh ISE Professor Sutton Monro’s contribution once again) that finds optimal trade-offs among competing fairness goals, allowing domain experts to select the trade-off that is least harmful to all subpopulations.
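The article does not spell out the team’s algorithm, so the sketch below swaps in a standard, simpler technique to show the general idea of trading off competing goals: weighted-sum scalarization. Two hypothetical subpopulations each get their own loss, a weight `lam` sets how much each one counts, and stochastic gradient steps (one random example from each group, step size $1/(k+1)$) minimize the weighted combination. Sweeping `lam` yields a menu of candidate models for a domain expert to choose from. The data, losses, and function names are all assumptions for illustration.

```python
import random


def group_loss(w, data):
    """Hypothetical loss for one subpopulation: mean squared distance from w."""
    return sum((w - d) ** 2 for d in data) / (2 * len(data))


def sgd_weighted(group_a, group_b, lam, steps=5000, seed=0):
    """Minimize lam * loss_A + (1 - lam) * loss_B using stochastic gradients.

    Each step samples one example from each group; the step sizes 1/(k+1)
    satisfy the Robbins-Monro conditions.
    """
    rng = random.Random(seed)
    w = 0.0
    for k in range(steps):
        a, b = rng.choice(group_a), rng.choice(group_b)
        g = lam * (w - a) + (1 - lam) * (w - b)  # unbiased estimate of the full gradient
        w -= g / (k + 1)
    return w


def trade_off_curve(group_a, group_b, lams):
    """One candidate model per weighting, reported as (lam, loss_A, loss_B)."""
    curve = []
    for lam in lams:
        w = sgd_weighted(group_a, group_b, lam)
        curve.append((lam, group_loss(w, group_a), group_loss(w, group_b)))
    return curve


# Toy data: the two groups are best served by different parameter values.
group_a = [0.8, 1.0, 1.2]
group_b = [-1.2, -1.0, -0.8]
for lam, la, lb in trade_off_curve(group_a, group_b, [0.0, 0.25, 0.5, 0.75, 1.0]):
    print(f"lam={lam:.2f}  loss_A={la:.3f}  loss_B={lb:.3f}")
```

As `lam` increases, loss_A falls and loss_B rises: improving the outcome for one group costs the other, and no single setting is best for both. Picking a point on this curve is the kind of judgment the team’s approach leaves to domain experts.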

For example, the algorithm could be used to help ensure that selection for a specific job was unaffected by the applicant’s sex while potentially still allowing the company’s overall hiring rate to vary by sex if, say, women applicants tended to apply for more competitive jobs.
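The hiring example is easy to check with arithmetic. In the hypothetical counts below (invented for illustration, not data from the study), each job selects women and men at exactly the same rate, yet the company’s overall hiring rates differ by sex because more women applied to the more competitive job.

```python
from fractions import Fraction

# Hypothetical counts, invented for illustration: (job, sex) -> (applicants, hired).
OUTCOMES = {
    ("competitive", "women"): (80, 8),
    ("competitive", "men"): (20, 2),
    ("less competitive", "women"): (20, 10),
    ("less competitive", "men"): (80, 40),
}


def rate_within_job(job, sex):
    """Selection rate for one sex among applicants to one specific job."""
    applicants, hired = OUTCOMES[(job, sex)]
    return Fraction(hired, applicants)


def overall_rate(sex):
    """Company-wide hiring rate for one sex, pooled across all jobs."""
    applicants = sum(a for (job, s), (a, h) in OUTCOMES.items() if s == sex)
    hired = sum(h for (job, s), (a, h) in OUTCOMES.items() if s == sex)
    return Fraction(hired, applicants)


for job in ("competitive", "less competitive"):
    print(job, rate_within_job(job, "women"), rate_within_job(job, "men"))
# competitive 1/10 1/10
# less competitive 1/2 1/2
print("overall", overall_rate("women"), overall_rate("men"))  # overall 9/50 21/50
```

A fairness goal stated per job is met here even though a goal stated on overall rates is not, which is why the choice of fairness goal matters.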

The team’s work on explainable graph neural networks used the stochastic gradient method to find human-friendly explanations of why the machine learning model makes favorable or unfavorable decisions for different subpopulations.

Designing ‘adaptive algorithms’

While Xie and his team continue to work on fair and transparent algorithms for the populations impacted by them, Curtis and his team are working on making those same algorithms more efficient for the companies, governments, and institutions that use them.

Curtis, who was a recipient of the 2021 Lagrange Prize in Continuous Optimization, one of the field’s top honors, will continue his work supported by a new $250,000 grant from the National Science Foundation. The project will develop modern improvements to the stochastic gradient method.

According to the project description, “despite the successes of certain optimization techniques, large-scale learning remains extremely expensive in terms of time and energy, which puts the ability to train machines to perform certain fundamental tasks exclusively in the hands of those with access to extreme-scale supercomputing facilities.”

“One downside of the stochastic gradient method is that it requires a lot of ‘tuning,’” Curtis says, “which means that, for example, Google may need to run the algorithm a very large number of times with different parameter settings in order to find a setting in which the algorithm actually gives a good result.”
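A minimal sketch of what such tuning looks like, under assumptions of our own: a hypothetical toy problem (fitting a single number to 200 synthetic data points), plain stochastic gradient steps with a fixed step size, and a brute-force grid search over candidate step sizes. The names `run_sgd` and `grid_search` are illustrative. Every candidate setting costs a complete training run, and for a large model each run is itself expensive.

```python
import random

# Hypothetical toy problem: choose w to minimize mean squared error to DATA.
_rng = random.Random(0)
DATA = [_rng.gauss(5.0, 1.0) for _ in range(200)]


def loss(w):
    """Mean squared-error loss over the data set."""
    return sum((w - d) ** 2 for d in DATA) / (2 * len(DATA))


def run_sgd(step, iters=500, seed=1):
    """One complete training run with a fixed step size (the setting being tuned)."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(iters):
        d = rng.choice(DATA)
        w -= step * (w - d)  # stochastic gradient of (w - d)^2 / 2
        if abs(w) > 1e6:  # iterates blew up: count this as a failed run
            return float("inf")
    return loss(w)


def grid_search(steps):
    """Tuning by brute force: run training once per candidate and keep the best."""
    results = {s: run_sgd(s) for s in steps}
    best = min(results, key=results.get)
    return best, results


best, results = grid_search([0.001, 0.01, 0.1, 1.0, 2.5])
for step, final_loss in results.items():
    print(f"step={step:<6} loss={final_loss:.3f}")
print("best step size:", best)
```

Here the smallest step size barely moves `w` in the allotted iterations and the largest makes the iterates blow up, so both runs are wasted computation; only settings in between give a good fit, and there is no way to know that in advance without trying.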

This tuning can waste hours, weeks, or even months of computation, which translates into a great deal of electrical power. It’s one of the reasons that only the big internet companies can afford to train very large-scale models for complicated tasks.

“For our project,” Curtis says, “we are designing ‘adaptive’ algorithms that adjust their parameter settings during the training process, so that they might be able to offer equally good solutions, but without all the wasted effort for tuning.”
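The article does not describe the project’s algorithms, so as a stand-in the sketch below uses AdaGrad, a well-known adaptive step-size rule from the literature (not Curtis’s method), on a hypothetical toy problem of our own. Instead of a hand-tuned fixed step, each update is scaled by `eta` divided by the square root of the running sum of squared gradients, so the effective step size adjusts itself during training.

```python
import math
import random

# Hypothetical toy problem: choose w to minimize mean squared error to DATA.
_rng = random.Random(0)
DATA = [_rng.gauss(5.0, 1.0) for _ in range(200)]


def loss(w):
    """Mean squared-error loss over the data set."""
    return sum((w - d) ** 2 for d in DATA) / (2 * len(DATA))


def adagrad(eta, iters=500, seed=1, eps=1e-12):
    """Stochastic gradient steps with an AdaGrad-style adaptive step size.

    The effective step eta / sqrt(sum of squared gradients so far) shrinks
    automatically as training proceeds; eps guards against division by zero.
    """
    rng = random.Random(seed)
    w, g_sq_sum = 0.0, 0.0
    for _ in range(iters):
        g = w - rng.choice(DATA)  # stochastic gradient of (w - d)^2 / 2
        g_sq_sum += g * g
        w -= eta * g / math.sqrt(g_sq_sum + eps)
    return loss(w)


for eta in (0.1, 1.0, 10.0):
    print(f"eta={eta:>5}: final loss {adagrad(eta):.3f}")
```

Because the current gradient is included in the running sum, no single update can move `w` by more than `eta`, so even a poorly chosen `eta` cannot make the run blow up the way an oversized fixed step can. AdaGrad still has `eta` to choose, and a very small value can still make slow progress, so it eases rather than eliminates the need for tuning.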

—Story by Steve Neumann