From mapping sunspots to mapping genomes to optimizing search engines, high-performance computing is uniquely positioned to illuminate the inner workings of natural phenomena and of human endeavors.

High-performance computing makes it possible to take systems more complex than we can imagine, model them mathematically, and analyze or improve these systems, often by solving countless equations in a second’s time.

HPC, as it’s called, lets car manufacturers run crash tests virtually, reliably and cheaply. It helps biologists simulate the activities of a cell and it enables physicists to model the flows of plasma in a nuclear fusion reactor. UPS and FedEx use HPC to select the best way, among millions of options, of routing thousands of drivers to their destinations.

HPC also brings rocket science to everyday life. One manufacturer of household appliances upgraded its computer cluster to solve the intertwined demands of product safety, supply chain management and protective packaging. A coffee producer used finite element analysis to model and solve problems caused by gas buildup when it switched from metal to plastic containers.

Because of its very nature, says Ted Ralphs, associate professor of industrial and systems engineering, HPC is becoming more accessible. HPC tackles a large task by dividing it into subtasks and assigning these to processors that work in parallel to solve them. As these processors grow smaller and more affordable, says Ralphs, every workstation and desktop PC becomes a potential contributor to an HPC infrastructure.

“One big trend in HPC is that hardware is becoming more commoditized,” says Ralphs, who led Lehigh’s HPC steering committee for eight years. “It used to be that your PC was good only for basic functions and that you had to switch to a large machine to do a big job.

“Today, you can buy a group of PCs off the shelf, link them with a fast network connection and do perfectly acceptable parallel computing. Special processors are required less and less. Off-the-shelf equipment has enough power and memory for many tasks.”

In the past four years, Lehigh has greatly expanded its HPC facilities with half a dozen strategic purchases of computer clusters, workstations and storage hardware. The university has also installed the Condor Project, which marshals all campus computing power – HPC facilities as well as the capacity of several thousand PCs in Lehigh’s public labs when those PCs are idle – to run large tasks.

“Condor is in constant communication with the computers on campus,” says Ralphs. “It identifies machines with capacity that’s not being used, and sends tasks to them. Not many campuses have this level of opportunistic computing that taps into commodity hardware.”

Computing and HPC underlie most of Lehigh’s major research efforts. Mathematicians use HPC to search for strange number pairs for security codes. Electrical engineers investigate signal processing as well as the energy costs associated with data warehousing. Biologists and bioengineers model the behavior of molecules, and geologists project climate change patterns.

Computer scientists use HPC to investigate computer-vision and pattern-recognition technologies. Mechanical engineers and physicists model the dynamic flow of fluids, including the plasma in nuclear fusion reactors. Physicists run numerical simulations to calculate the atomic structures and vibrational properties of material defects in semiconductors.

Lehigh will examine the state of the art in HPC when it hosts a workshop Oct. 5–6 titled “Computational Engineering & Science/HPC: Enabling New Discoveries.”

The following articles showcase some of the uses HPC has found at Lehigh.

Dividing and conquering

Optimization problems, says Ted Ralphs, are tailor-made for HPC. Take the routing of delivery trucks. You must evaluate thousands of possible ways of assigning 100 drivers to deliver 25 packages each and identify the one solution that requires the fewest driver-miles.

“An optimization problem lends itself to a divide-and-conquer approach,” says Ralphs. “You divide a set of problems into portions and mathematically prove that certain portions will or will not yield useful information. This is a naturally parallelizable process because you give each portion of a problem to a different processor.”
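The divide-and-conquer idea Ralphs describes can be illustrated with a minimal branch-and-bound sketch. This is not Ralphs's software: the function name `shortest_route`, the tiny distance table and the simple pruning rule are assumptions chosen for illustration, and the search runs on one processor. Each choice of "next stop" opens a portion of the problem that could, in principle, be handed to a different processor, and a portion is discarded as soon as it provably cannot beat the best route found so far.

```python
def shortest_route(dist):
    """Find the cheapest round trip from stop 0 through every other stop.

    dist[i][j] is the (non-negative) cost of driving from stop i to stop j.
    """
    n = len(dist)
    best = {"cost": float("inf"), "route": None}

    def explore(route, cost, remaining):
        # Bound: costs are non-negative, so a partial route that already
        # costs as much as the best complete route cannot improve on it.
        if cost >= best["cost"]:
            return
        if not remaining:
            total = cost + dist[route[-1]][0]  # drive back to the depot
            if total < best["cost"]:
                best["cost"], best["route"] = total, route + [0]
            return
        # Branch: every choice of next stop is an independent subproblem.
        for stop in sorted(remaining):
            explore(route + [stop],
                    cost + dist[route[-1]][stop],
                    remaining - {stop})

    explore([0], 0, frozenset(range(1, n)))
    return best["cost"], best["route"]


# Hypothetical four-stop example (stop 0 is the depot).
example = [
    [0, 2, 9, 10],
    [1, 0, 6, 4],
    [15, 7, 0, 8],
    [6, 3, 12, 0],
]
print(shortest_route(example))
```

Real solvers use far sharper bounds than "the partial route is already too long," but the structure of branching, bounding and discarding is the same.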

Parallel processing, says Ralphs, thrives when all processors are busy all of the time doing productive work. Avoiding “down time,” however, is challenging when using a large number of processors. You do not know in advance how much work each portion of your problem will require. If half the portions are solved quickly, the computing capacity assigned to them will sit idle while the other portions are being solved.

“Idle time,” says Ralphs, “means your computing capacity is not paying its way.”

The answer, says Ralphs, is to shift data dynamically to underutilized processors in order to achieve efficiency. This becomes more difficult the more processors you use to solve a problem, and it makes demands on speed and bandwidth. Data must be shifted constantly, especially while solving a complicated problem. And this data management must not be allowed to consume computing resources and undermine efficiency.
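A small simulation, offered only as a sketch, shows why shifting work dynamically matters. The function names and task costs below are hypothetical, and the model ignores the communication overhead that the article notes is the hard part in practice. It compares a fixed, up-front split of tasks with a scheme in which each task goes to whichever processor becomes free first.

```python
import heapq


def static_makespan(costs, workers):
    """Finish time when tasks are dealt out round-robin before work starts."""
    loads = [0.0] * workers
    for i, cost in enumerate(costs):
        loads[i % workers] += cost
    return max(loads)


def dynamic_makespan(costs, workers):
    """Finish time when each task goes to the first processor to become free."""
    free_at = [0.0] * workers          # time at which each processor is idle
    heapq.heapify(free_at)
    for cost in costs:
        start = heapq.heappop(free_at)  # earliest available processor
        heapq.heappush(free_at, start + cost)
    return max(free_at)


# Hypothetical workload: long and short subproblems alternate.
task_costs = [8, 1, 8, 1, 8, 1, 8, 1]
print(static_makespan(task_costs, 2), dynamic_makespan(task_costs, 2))
```

With the fixed split, one processor ends up with all the long tasks while the other sits idle; the dynamic scheme keeps both busy until the end.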

Ralphs tackles these challenges by writing “scalable” algorithms that determine how to move data around so each processor is always doing something useful to contribute to the overall computation.

“My goal is to write one algorithm with many procedures that covers the entire process no matter how many processors I’m using,” he says. “I want one strategy that can be automated. If my method of shifting data changes because of the number of processors I’m using, this change should happen automatically.”

Ralphs once wrote software to manage a task that required 2,000 processors. But scale is not his primary goal. Ralphs runs Computational Infrastructure for Operations Research, or COIN-OR, a repository of open-source software tools for optimization problems. People around the world have used his tools, and Ralphs takes delight in learning how his programs are applied. One of his favorite emails came from a man who said COIN-OR’s optimization tools had helped overcome a water-delivery challenge in Africa.

“I develop fundamental tools and see what people do with them,” Ralphs says. “I’m happy when I can produce something that helps someone solve a problem.”

When time scales conflict

Life for atoms can be a contradiction in time scales. Take ceramic powders, for example. Scientists estimate their atoms vibrate as many as 10^14 times per second, or one million times one million times one hundred.

When the powders are heated, or sintered, to form a solid material, says Jeff Rickman, a second, more leisurely motion results. Every 10,000 atomic vibrations or so, an atom hops from one location to another in the crystal lattice.

This hopping constitutes a phenomenon called diffusion, in which a material’s molecules intermingle by randomly migrating from a region of higher concentration to one of lower concentration.

The difference between these two time scales, between fast and incomprehensibly fast, is of great consequence to Rickman, a professor of materials science and engineering who uses HPC to build computational models of diffusion.

Rickman studies the diffusion of aluminum and oxygen ions in aluminum-oxide (alumina), which is used in the manufacture of aluminum and in advanced ceramics, catalysts, tools and engine parts. Diffusion plays a role in creep, in which a solid material deforms because of low-level stresses.

Rickman’s goal is to learn how a tiny amount of an impurity can alter diffusion and other transport properties. He has conducted tensile loading experiments to examine creep and oxidation, and he is constructing computational models to learn how impurities affect diffusion.

Because the ions in alumina are coupled, Rickman must write equations of motion for all the pairs in a system and solve the equations together. “Everything in this system is interconnected,” he says. “Each ion exerts force on its neighbor. If one moves, both are affected.”

Rickman’s equations must also take into account the vastly different speeds at which atoms hop and vibrate.

“Diffusion is a slow process compared with the vibrations of atoms. We are interested in the atoms that are hopping but we must also watch the atoms that are shaking. To integrate the equations, we have to bridge time scales. To solve the equations, we have to follow the fastest thing happening even if we’re not interested in it.

“And because we’re studying transport over distance, we have to wait for many hops to occur before we can make a meaningful calculation about diffusion.”
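The cost of bridging the two time scales can be estimated with back-of-the-envelope arithmetic. The sketch below uses the two figures quoted in the article; the number of integration steps per vibration (20) is an assumption made for illustration, as are the function names.

```python
VIBRATIONS_PER_SECOND = 1e14  # upper estimate quoted in the article
VIBRATIONS_PER_HOP = 10_000   # "every 10,000 atomic vibrations or so"


def timesteps_needed(hops, steps_per_vibration=20):
    """Integration steps required to watch one atom make `hops` hops.

    steps_per_vibration is an assumed resolution, not a figure from the
    article: the solver must take several steps per vibration to follow
    the fastest motion in the system.
    """
    return hops * VIBRATIONS_PER_HOP * steps_per_vibration


def simulated_seconds(hops):
    """Physical time that elapses while one atom makes `hops` hops."""
    return hops * VIBRATIONS_PER_HOP / VIBRATIONS_PER_SECOND


print(timesteps_needed(1000), simulated_seconds(1000))
```

Under these assumptions, following a single atom through 1,000 hops takes hundreds of millions of time steps yet covers only a tiny fraction of a second of physical time, which is why the calculation is spread over many processors.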

Rickman writes parallel codes to simulate the phenomena in the system he is studying. “We’re looking at a relatively large system. This implies the need to subdivide the system into parts, to use different processors and to do this in such a way that processes occurring almost independently can be modeled almost in parallel.”

Rickman uses Lehigh’s HPC facilities as well as those at the Pittsburgh Supercomputing Center. He collaborates with Helen Chan and Martin Harmer, professors of materials science and engineering. The group receives funding from the Office of Naval Research.

Fatigued cantilevers

Take a second look at the cantilevered traffic signals and highway signs that you see everywhere on roads and freeways. The welded connections that support these structures are vulnerable to fatigue cracking from the cumulative effects of winds and breezes. The issue has become urgent in the U.S., especially in the West and Midwest, where signs have fallen and structures have collapsed.

Lehigh’s ATLSS (Advanced Technology for Large Structural Systems) Center is combining HPC with full-scale lab tests to develop specifications for the design and fabrication of new sign and mast structures and for the retrofitting of existing structures. The four-year project is funded by the American Association of State Highway and Transportation Officials and the Federal Highway Administration.

The ATLSS group is conducting 100 to 110 tests on 80 structures. The group has also conducted simulations of 18,000 mathematical models of the structures and the welded connections using ABAQUS, a suite of finite-element analysis software. Each model contains about 40,000 degrees of freedom, a term that refers to the number of unknowns, and therefore equations, that must be solved at each iteration.

The project makes use of two HPC architectures in Lehigh’s Computing Center. The SMP (Symmetrical MultiProcessor) computing facility contains a large number of processors and a shared memory in a single machine. The 40-machine Beowulf cluster enables parallel processing of full-scale structural systems by parceling parts of one large analysis out to many different machines.

The lab tests and computational simulations complement each other, says ATLSS senior research scientist Sougata Roy, who oversees the project with ATLSS director Richard Sause.

“Because lab tests are expensive and time-consuming, you can do only a limited number of them. Simulation enables you to extend your findings, but it goes only so far. Even the most sophisticated calculations will not produce the right result until you can confirm your mathematical model with experimental results.

“You can then use mathematical modeling to project the results of lab tests to a similar, but distinct, set of tests. As more experimental data comes in, you refine your model to improve its predictions.”

Advances in computing over the past 15 years or so have enabled engineers to analyze and simulate more realistically how an entire structure, and its design, responds to different types of failure modes.

“Our goal,” says Roy, “is to define the infinite life threshold of the variation of the critical connections in these structures so that we know that no fatigue-induced failure will occur during their design lifetime. This will enable future standards to specify how many cycles a structure can sustain.”

To shade or to shine

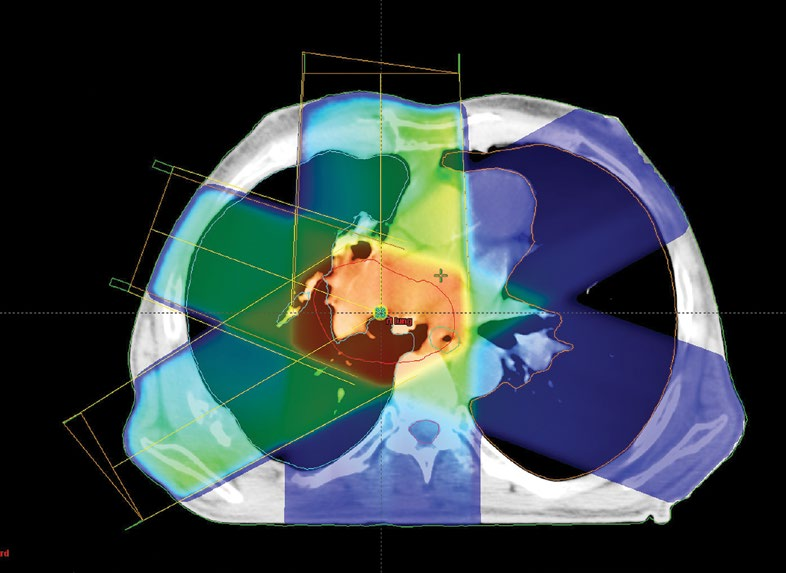

HPC has long played a role in the imaging of tumors, says Tamas Terlaky, but it has not yet reached its full potential.

“Massively parallel computing technology gives us an optimal image of the tumor and the healthy organs alongside it,” says Terlaky, the chair of the department of industrial and systems engineering. “Once you have this image, you have to decide how to radiate the tumor. Twenty years ago, radiologists used a large gamma ray beam that revolved around the body, radiating from different angles. But this was a uniform beam that often burned healthy tissue and tumor alike.”

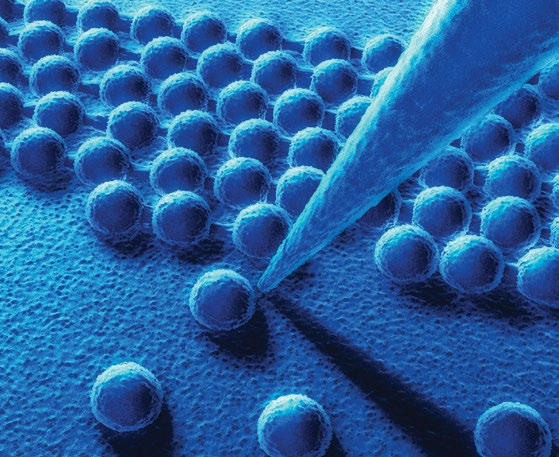

Advances in computational modeling enable today’s radiologists to limit this damage by modulating the intensity of the beam as it moves in an arc around the tumor. This Intensity-Modulated Radiation Therapy (IMRT) is achieved by focusing the beam through a grid of pinholes as small as 1 mm across that can be shaded. Radiologists and medical physicists solve linear equations to determine which holes should be shaded, and by how much, at a large number of positions along the arc.

“You need to decide where to shade and where to shine from each position as the beam moves around,” says Terlaky. “This involves millions of variables and is far beyond what a medical doctor by intuition can do. You need a mathematical model capable of solving millions of equations to calculate the optimal pattern of radiation.”
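A toy version of the shade-or-shine decision can be written in a few lines. This is a sketch under stated assumptions, not the clinical model or Terlaky's method: the dose table, the target values and the function names are invented, and the solver is a plain projected gradient descent on a least-squares objective. Each pinhole gets an opening between 0 (fully shaded) and 1 (fully open), chosen so the delivered dose is high on tumor points and low on healthy ones.

```python
def squared_error(dose_per_hole, target, openings):
    """Sum of squared gaps between delivered and desired dose."""
    total = 0.0
    for row, wanted in zip(dose_per_hole, target):
        delivered = sum(a * x for a, x in zip(row, openings))
        total += (delivered - wanted) ** 2
    return total


def plan_openings(dose_per_hole, target, steps=2000, rate=0.1):
    """Projected gradient descent with every opening kept in [0, 1]."""
    n_holes = len(dose_per_hole[0])
    x = [0.0] * n_holes
    for _ in range(steps):
        # Gap between delivered and desired dose at every body point.
        residual = [sum(a * xi for a, xi in zip(row, x)) - wanted
                    for row, wanted in zip(dose_per_hole, target)]
        for j in range(n_holes):
            grad = 2 * sum(row[j] * r
                           for row, r in zip(dose_per_hole, residual))
            # Step downhill, then clip back into the allowed range.
            x[j] = min(1.0, max(0.0, x[j] - rate * grad))
    return x


# Hypothetical data: rows are body points, columns are pinholes.
dose_table = [[1.0, 0.2],   # tumor point
              [0.3, 1.0],   # tumor point
              [0.5, 0.5]]   # healthy point
desired = [1.0, 1.0, 0.0]
plan = plan_openings(dose_table, desired)
print(plan, squared_error(dose_table, desired, plan))
```

A real treatment plan involves millions of such variables plus the uncertainties described below, which is what pushes the problem onto parallel hardware and specialized solvers.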

This model must also overcome the uncertainties inherent in radiation therapy, says Terlaky. As your tumor is being radiated, for example, you are breathing and your body is moving. And the image on which your mathematical model is based is no longer accurate, as you will have gained or lost weight since it was taken.

To develop models robust enough to deal with these uncertainties, Terlaky uses SeDuMi (Self-Dual-Minimization), a software package that solves optimization problems over symmetric cones and can link the linear and nonlinear aspects of IMRT.

“Thanks to SeDuMi, we can now solve optimization problems that are much bigger and more complicated than ever before, and we can solve them much more quickly,” says Terlaky, who directed McMaster University’s School of Computational Engineering and Science in Ontario before joining Lehigh’s faculty in 2008.

“Fifteen years ago, there was no way to solve these problems. SeDuMi has helped us solve a small fraction of them.”

Invisible adversaries

Only a minority of Internet users, says Brian Davison, click past the first page of a list of search results, and barely 10 percent make it to the third page. Hundreds of millions of searches are conducted daily and a query can yield millions of results. Factor in human nature and you have the ingredients for an invisible and adversarial contest: While search engines seek to provide accurate results for clients, shady content providers use link-bombing, blog spam, comment spam and other gimmicks to falsely boost their rankings.

The phenomenon has given rise to a field of study called adversarial information retrieval (IR), which helps search engines identify Web sites that manipulate search engine rankings. Adversarial IR researchers have held five annual AIRWeb workshops; Davison, an associate professor of computer science and engineering, organized the first three and gave the keynote address at AIRWeb 2009 in Madrid, Spain.

Davison collaborates with researchers from Yahoo, Google and Microsoft to improve the quality of search engine rankings. In his work, which is supported by an NSF CAREER Award and by Microsoft, he writes algorithms that perform contextual link analysis to help search engines achieve more accurate rankings. This type of analysis gauges the reputation of a Web site by evaluating the sites from which it is linked.

“If 50,000 pages match a query,” says Davison, “which are most authoritative? Search engines track the number of times Web page authors link to a page. We try to be more intelligent by factoring in the topics of the sites that link to the pages that come up in a search. We determine this by following links on the Internet. The links that a Web site chooses are regarded by search engines as expressions of popularity.”
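The basic idea of scoring a page by the pages that link to it can be sketched with the well-known PageRank power iteration. This is a generic textbook technique, not Davison's contextual algorithm: it ignores the topics of the linking sites, and the function name, damping value and four-page Web below are assumptions for illustration.

```python
def link_scores(links, damping=0.85, iterations=50):
    """Score pages by inbound links. links maps a page to pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    score = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page keeps a small baseline score regardless of links.
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current score among the pages it cites.
                share = damping * score[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outgoing links spreads its score evenly.
                for t in pages:
                    new[t] += damping * score[page] / n
        score = new
    return score


# Hypothetical four-page Web: three pages link to "c".
web = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = link_scores(web)
print(max(scores, key=scores.get), scores)
```

On hundreds of millions of pages the same loop becomes a calculation over an enormous link table, which is why keeping the whole data set in memory matters so much.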

Davison analyzes hundreds of millions of Web pages. Hoping to push that number to one billion, he has assembled dozens of hard drives and tens and eventually hundreds of terabytes of storage. “To follow the Web in a believable way, we work with ever-larger collections of data. As a result, we need machines with lots of memory. We can perform our calculations much faster if our entire set of data fits in the memory.”

Fighting search engine spam, Davison said at AIRWeb 2009, is like playing a high-stakes game of chess against an opponent who constantly changes the rules.

“The Web is becoming the sum of human knowledge. It’s vital to know what information can be considered true or objective.”