The robots of the future could do important work in disaster areas. They could deliver aid, search for survivors, and provide intelligence on the scope of structural damage and human casualties. These are all scenarios where it might be dangerous to send human operators.

But today’s autonomous robots don’t yet have that intelligence. Specifically, they don’t have adequate capability to understand, and act upon, risk and perception uncertainty.

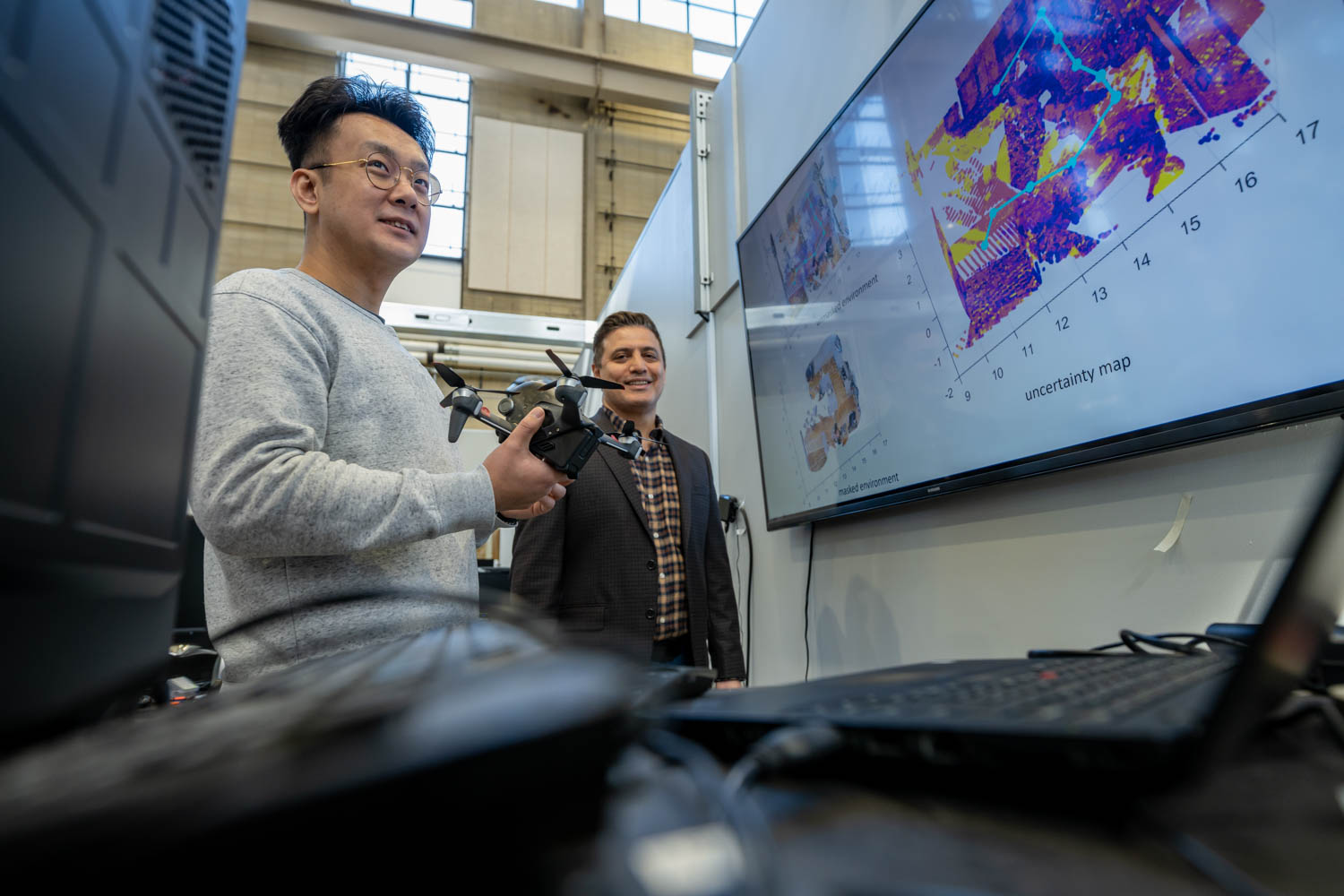

“As human beings, we face a lot of perception uncertainties in our daily lives,” says Guangyi Liu, a PhD student in the Department of Mechanical Engineering and Mechanics (pictured above, right). “Over time, we learn from our experiences and find out risk-aware philosophy to mitigate these uncertainties. For example, if the weather is foggy, we’ll drive slowly and carefully and make safe decisions with impaired visual inputs. Robots, however, still need to learn from their experience and learn to deal with the perception uncertainties. And we have to teach them via the establishment of risk-aware perception, decision-making, and control.”

As part of his research on risk analysis in robot perception systems, Liu developed theories that guide robots to react safely to uncertain situations, more as their human counterparts would. These theories help the robots decide whether they should trust noisy measurements and data, and how trustworthy those measurements are.

“Then, based on the evaluated risk level of the uncertain situation, the robot can say, ‘I’ll choose the action that is associated with the lowest risk among all potential actions despite my measurements being noisy and less informative,’” says Liu. “And the robot will safely traverse the uncertain environment and make more ‘aggressive’ actions once the situation gets less uncertain.”

Over the course of his PhD studies with advisor Nader Motee (inset photo, right), a professor of mechanical engineering and mechanics and the director of Lehigh’s Autonomous and Intelligent Robotics Laboratory (AIR Lab), Liu and his colleagues have developed a formal framework, which can be applied to any robotic system, that will allow the robot to act safely with uncertain perception measurements and inputs.

“What’s particularly exciting about our research is that we build the bridge between the safety and the perception uncertainty and we also provide guarantees,” he says. “The problem is extremely important and it is not easy to solve. That’s why we need to bring it to all the other peer researchers and reach out for more collaborations.”

To this end, Liu has taken an active role in the broader robotics and control community by serving as an organizer for both an IEEE American Control Conference (ACC) workshop in 2023 and three ACC invited sessions.

“For the ACC conference in July, we’ll host two invited sessions on addressing the related problems in the risk-aware robotics and control systems,” he says, “in which we have reached out to our peer researchers and invited them to submit and present their technical papers related to these topics.”

Each invited session includes six papers from various research groups, and those papers represent the latest advancements in the field of risk-aware planning and control. As the organizer, Liu has been responsible for seeking out and inviting those investigators to submit their papers. It’s a labor of love of sorts that Liu makes time for around his own rigorous research schedule, his impending graduation, and decisions on where to pursue a postdoc.

“The workshops and sessions are a lot of work to organize and they are extremely time-consuming,” he says. “But they provide an amazing opportunity to bring researchers who are interested in these topics together. And it’s that kind of proximity that sparks new ideas and new collaborations to tackle this challenge and pushes our field further.”

—Story by Christine Fennessy; photography by Christa Neu