LiDAR (shorthand for “light detection and ranging”) has been hailed as a game-changer in the race toward developing a truly “driverless” car, or what the Society of Automotive Engineers defines as a Level 5 autonomous vehicle (AV).

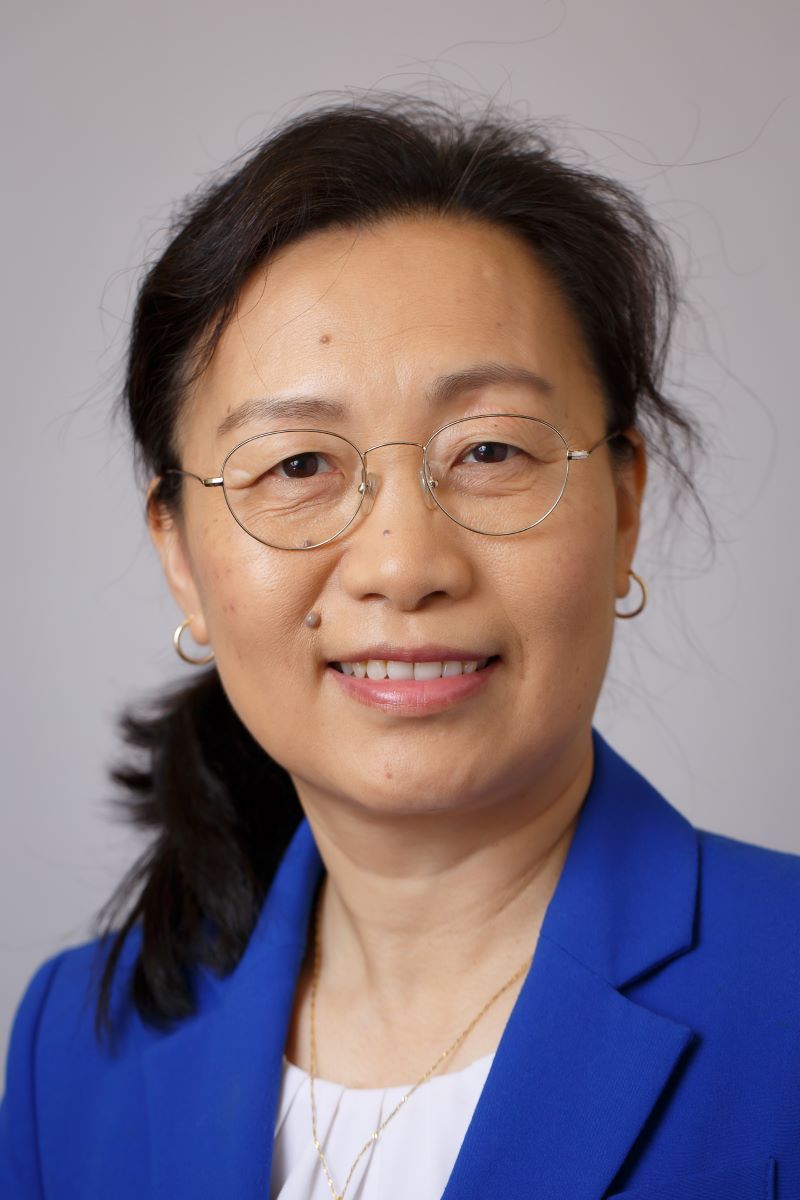

“The technology is similar to radar and sonar, in that the device sends out a pulse, which bounces back signals to detect obstacles,” explains Yahong Rosa Zheng, a professor of electrical and computer engineering. “LiDAR uses a narrow beam of light to very quickly scan the area and create a ‘point cloud’ that provides highly accurate range information.”
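The ranging principle Zheng describes can be sketched in a few lines. A pulse's round trip gives distance, and a 2-D scanner repeats this at many beam angles, so each (angle, range) pair becomes one point in the cloud. This is a minimal sketch, not code from the course. The field names loosely follow the ROS `sensor_msgs/LaserScan` message, and the 30 m cutoff is a hypothetical value rather than the spec of any particular sensor.

```python
import math

# Speed of light in a vacuum, metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Distance to a target from a pulse's round-trip time.

    The pulse travels out and back, so the one-way range is half the path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def scan_to_points(ranges, angle_min, angle_increment, max_range=30.0):
    """Turn a 2-D scan (one range per beam) into (x, y) points in the sensor frame.

    Field names loosely follow ROS sensor_msgs/LaserScan; max_range=30.0 m is
    a hypothetical cutoff, not a property of any particular sensor.
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip missing (inf/NaN), non-positive, or out-of-range returns.
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            continue
        theta = angle_min + i * angle_increment  # beam angle in radians
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

For example, `tof_to_range(66.7e-9)` comes out just under 10 m, which shows why LiDAR timing electronics must resolve nanoseconds.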

When fed into an algorithm, LiDAR data (which is more manageable in size and requires less computation than the imagery captured by RGB cameras) allows the vehicle to accurately perceive its surroundings and navigate a clear, safe path.

Zheng, whose research focuses on underwater wireless communication, has recently integrated 2-D LiDAR into her course on Robotic Perception and Computer Vision, in which she teaches graduate and undergraduate students specialized programming methods for controlling robots. The hands-on, project-based class uses the F1TENTH Autonomous Vehicle System, an open-source platform launched in 2016 by the University of Pennsylvania.

“Each project builds on how to program the cars, looking at different algorithms for tasks like reactive methods, path planning and autonomous driving,” says Zheng. “We really get into the science and the equations and give students an in-depth understanding of the math behind the hands-on part of the class, which is racing the cars.”
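One widely used reactive method for small racing cars is "follow the gap": find the widest opening in the current LiDAR scan and steer toward it, with no map or planned path. The article does not say which reactive algorithms the course covers, so treat the following as an illustrative sketch under stated assumptions. The function name and the `safe_dist` and `bubble` tuning values are hypothetical, and a real controller would also limit steering and choose a speed.

```python
import math


def follow_the_gap(ranges, angle_min, angle_increment, safe_dist=1.0, bubble=3):
    """Steer toward the centre of the widest run of open beams in a 2-D scan.

    safe_dist (metres) and bubble (beams) are hypothetical tuning values.
    Returns a steering angle in radians in the sensor frame, or None if no
    open beam remains (the caller would then brake).
    """
    n = len(ranges)
    # NaN (no valid reading) counts as blocked; inf (nothing within range)
    # counts as open, as does any return farther than safe_dist.
    open_beam = [not math.isnan(r) and r > safe_dist for r in ranges]

    # Block a safety bubble of beams around the closest finite return.
    finite = [i for i in range(n) if math.isfinite(ranges[i])]
    if finite:
        closest = min(finite, key=lambda i: ranges[i])
        for i in range(max(0, closest - bubble), min(n, closest + bubble + 1)):
            open_beam[i] = False

    # Scan for the longest consecutive run of open beams.
    best_start, best_len, start = None, 0, None
    for i in range(n + 1):
        if i < n and open_beam[i]:
            if start is None:
                start = i
        elif start is not None:
            if i - start > best_len:
                best_start, best_len = start, i - start
            start = None

    if best_start is None:
        return None
    # Aim at the middle beam of that run.
    centre = best_start + (best_len - 1) / 2
    return angle_min + centre * angle_increment
```

Aiming at the middle of the gap keeps the sketch simple; common variants instead aim at the farthest point inside the gap, which tends to favor straighter, faster lines.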

In Spring 2020, she and her students built 11 of the vehicles, which are one-tenth the size of an actual Formula One car. Although the pandemic forced a pivot to simulated races, the students were able to take part in a socially distanced “play day” in a campus parking garage, testing out the vehicles on a homemade track.

“We’re teaching students the basics of the programming involved with AVs, while giving them the chance to see that there are a lot of research opportunities in this area,” she says. “Many of the companies working on autonomous driving are at Level 3 or not yet reaching Level 4,” she adds. “Nothing is set in stone yet.”

Zheng’s assessment is borne out by research heading in the opposite direction—away from LiDAR—led by computer science and engineering professor Mooi Choo Chuah.

In their quest for greater profitability and wider market adoption, Chuah says, makers of AVs must weigh the advantages of LiDAR against its relatively high cost compared with cameras. There is also debate over whether newer RGB-D camera technology (which provides per-pixel depth information), combined with advances in deep learning, could eventually close the gap with LiDAR in computer vision capabilities.
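Part of why RGB-D cameras enter this debate is that their depth channel can be turned into a point cloud much like LiDAR's. The sketch below uses the standard ideal pinhole camera model. It is not Chuah's method. The intrinsics `fx`, `fy`, `cx`, `cy` are inputs you would get from calibration, lens distortion is ignored, and treating a depth of 0 as "no reading" and depth as distance along the optical axis are assumptions that vary by camera.

```python
def depth_pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth along the optical axis into (X, Y, Z).

    Uses the ideal pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. Lens distortion is ignored.
    """
    z = depth_m
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)


def depth_image_to_points(depth, fx, fy, cx, cy):
    """Convert a depth image (rows of metres) into a list of 3-D camera-frame points."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            # Assumption: a depth of 0 (or less) marks a pixel with no reading.
            if d > 0:
                points.append(depth_pixel_to_point(u, v, d, fx, fy, cx, cy))
    return points
```

The geometry is cheap; the open question the debate turns on is whether camera-derived depth, possibly refined by deep learning, can match LiDAR's range accuracy in difficult lighting and at long distances.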

Chuah is exploring that possibility in a project funded by the National Science Foundation (NSF) to develop a robust perception system for AVs that uses cameras and smarter sensors and has lower computational demands. Her work on developing an efficient, cost-effective, and secure system, starting with algorithms for object detection and tracking and later moving on to trajectory prediction, could help integrate AV-related technology more widely into society.

“Everybody talks about 5G providing us with tons of bandwidth,” she says, “so why not put a superfast computer in every car so that we can just connect to the cloud? With AVs, we have to be able to handle all sorts of scenarios: What if you drive through a tunnel or a city full of tall buildings and lose your 5G connection? This is why we’re developing an innovative end-to-end perception system using deep learning models that will be able to process video frames more efficiently and make quick and accurate decisions to control the car, even in atypical situations.”

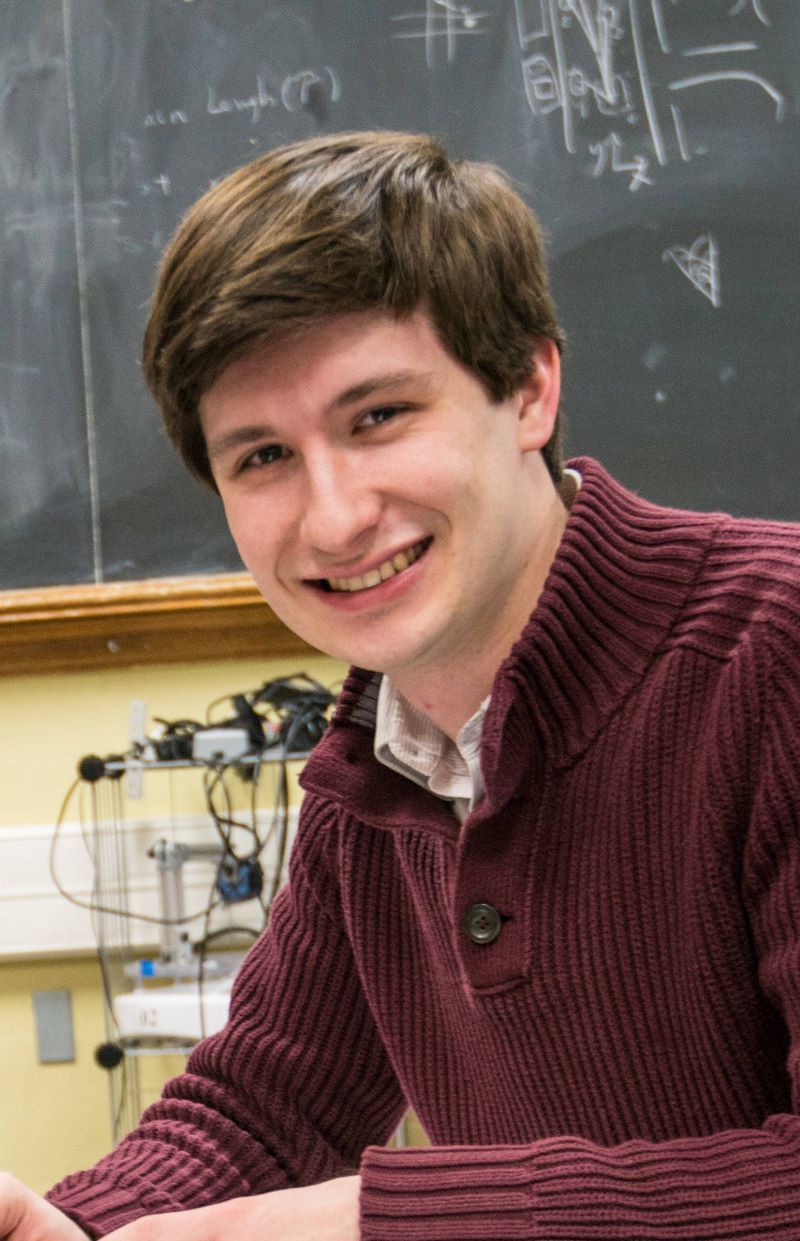

To test the system, Chuah is collaborating with Corey Montella ’19 PhD, a professor of practice in the computer science and engineering department. Using another open-source robotic car platform, this one built around NVIDIA’s Jetson Xavier NX embedded computer, Montella is leading undergraduate capstone courses focused on customizing the vehicles to make them faster and more dynamic.

Although the bots will start out using an existing vision-based navigation system, Chuah and Montella hope eventually to integrate the perception system Chuah is developing, creating a test bed and a proof of concept.

“The idea that we can have AVs without LiDAR might seem far-fetched to some people,” says Montella, “but there’s an existing RGB system that works to navigate cars: Our brains and our eyes gauge distances just fine, without precise location information. There should be a way to do this with just cameras, taking a more natural, less engineered approach. The challenge is figuring out how to replicate our common sense in a machine.”

—Katie Kackenmeister, assistant director of communications, P.C. Rossin College of Engineering and Applied Science