If there’s a holy grail in robotics, it’s syncing what machines perceive and how they act on that perception.

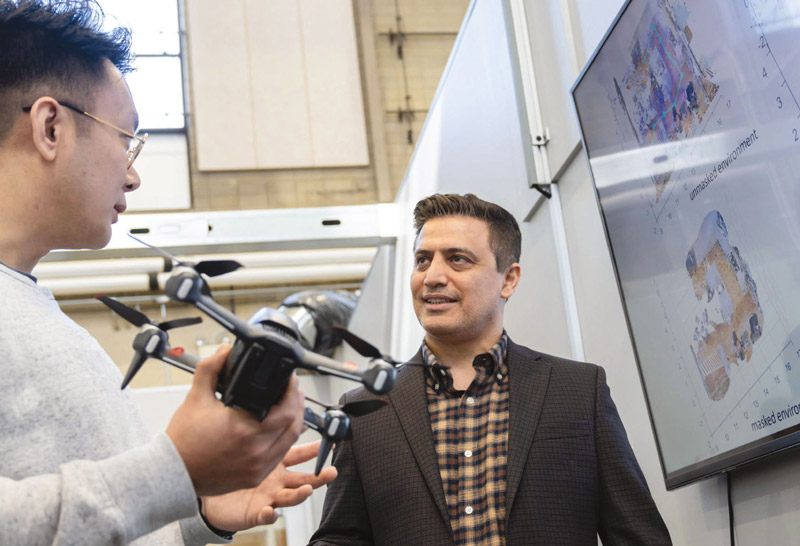

“The ultimate goal for researchers is intelligent autonomy,” says Nader Motee, a professor of mechanical engineering and mechanics and director of Lehigh’s Autonomous and Intelligent Robotics (AIR) Lab. “And that means bridging the divide between what robots sense and the actions they take.”

The approaches that Motee, his colleagues, and students are taking—through research, competitions, classes, and clubs—are positioning Lehigh as a respected hub for the study of robot autonomy. AIR Lab members are tackling challenges involving robots’ ability to carry and manipulate objects, interpret information to make navigation decisions, and adapt to changing operating conditions and environments.

“We’re constantly pushing the boundaries of what’s possible in the field,” he says.

A ‘knot-so-typical’ approach

When we think about drones, we tend to think about Amazon. But their potential is much greater, and arguably far more important, than dropping off a box of laundry pods by lunchtime (an idea that’s struggled to take off since Jeff Bezos floated it more than a decade ago).

Aerial robots could be a huge asset, saving time, money, and workers’ well-being, in industries like construction where humans often have to heft materials up multiple floors, says David Saldaña, an assistant professor of computer science and engineering. They could also deliver lifesaving supplies in disaster areas. “The goal would be to get to a point where people don’t have to touch the robot at all,” says Saldaña. “Instead, we could just tell the robot to pick up that box of medicine and deliver it where it is needed.”

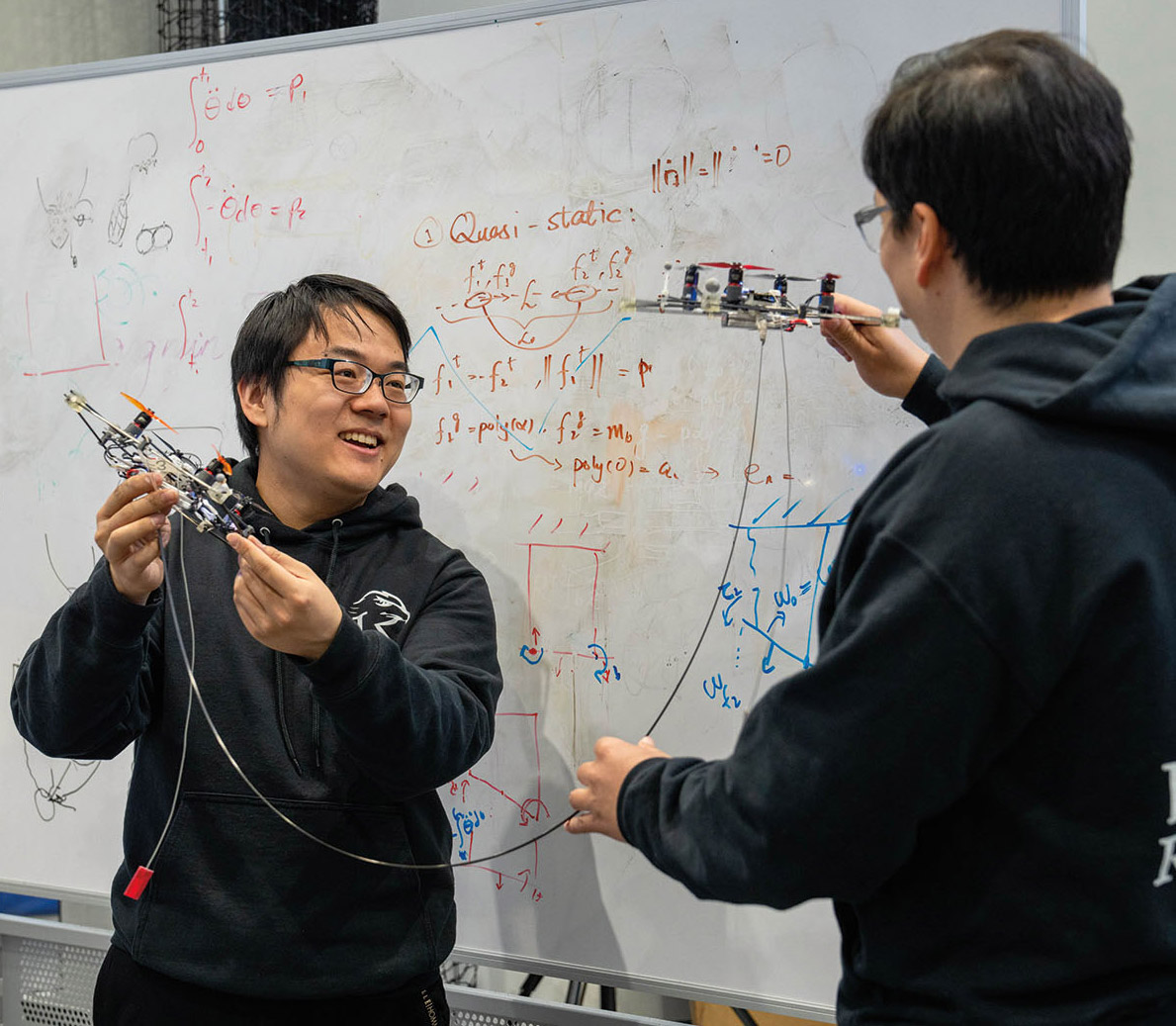

Traditional aerial systems in the literature have centered on robotic arms for autonomous grasping, which are heavy and hard to fly with as they change the drone’s center of mass. Weight-saving solutions have included using an origami-like construction and very small motors. Saldaña and his team were recently awarded a three-year, nearly $600,000 grant from the National Science Foundation for an idea that takes an entirely different approach: using cables, knots, hitches, and often multiple robots to move objects in the air.

The project involves developing algorithms that will allow drones to overcome friction and actually tie a knot in a cable without getting entangled themselves. “We can’t use the traditional reinforcement learning algorithms for this,” he says. “We have to make our own because they have to learn very fast, since robots in the air need to operate fast.”

The team will work with Subhrajit Bhattacharya, an assistant professor of mechanical engineering and mechanics, who will do the topological planning that will allow the robots to adapt to the specific requirements of securing individual items, like a chair versus a table. (Topology is the mathematical study of properties preserved through the twisting, stretching, and deformation—but not the tearing—of an object.)

The team will also be using hitches. “We have a new concept called a polygonal hitch,” says Saldaña. “Pairs of robots can make one side of the polygon, and are in charge of that side only. The polygon can be scaled up or down depending on the size of the object you’re moving.”

While the ultimate goal is to create a more efficient aerial delivery, the sheer number of problems that need to be solved first means that Saldaña will be happy to eventually perfect the transport of two items: a basketball and a solar panel. “Both will require a mesh where the cables are held together by friction, not with knots. And that means using multiple robots to interlace multiple cables, which is a very difficult task right now for robots. But this is a completely new way to look at transportation in aerial robots.”

Road-testing algorithms

Self-driving cars must follow the rules of the road while avoiding collisions. But as with humans, robots have a harder time seeing things that are far away. They often receive only partial information from distant road signs and markings. Knowing at what point to react to that information—and whether the amount of information is sufficient to require the action—presents a complex challenge.

Cristian-Ioan Vasile, an assistant professor of mechanical engineering and mechanics, and his team recently published a paper outlining a framework for perception-aware planning in self-driving cars. “Our algorithms allow actions to be taken by the vehicle based on partial symbolic information,” he says. “And using simulations, we saw a reduction in the risk of collision. The vehicle saw something, and while it may not have known exactly what it was, it knew enough to slow down.”

Formalizing their approach, however, wasn’t easy.

“There’s a discrepancy between perception coming from robot vision versus planning and control,” he says, “where the expectation is either that perception is perfect and you can make whatever decisions you need to make, or you assume the worst and perception is very uncertain, which makes control very hard. If you expect too much from the perception system, it’s going to lead to safety issues. On the other hand, if you try to take all possible uncertainty into account, your control algorithms will be very slow, and you risk veering at the last minute, or even colliding with obstacles. We had to construct a middle ground between the two.”
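To make the "middle ground" concrete, here is a toy sketch of acting on a partial detection. It is an illustration under stated assumptions, not the framework from Vasile's paper: the function name `target_speed`, the confidence threshold, and the braking limit are all hypothetical. The only established piece is the kinematic relation v = sqrt(2ad), the highest speed from which a vehicle decelerating at a can stop within distance d.

```python
import math


def target_speed(cruise, distance, confidence, max_decel=4.0, act_threshold=0.3):
    """Choose a speed (m/s) given a partial detection of something ahead.

    cruise        -- current cruising speed in m/s
    distance      -- distance in meters to the possible obstacle or sign
    confidence    -- hypothetical score in [0, 1] that the detection needs a stop
    max_decel     -- assumed comfortable braking limit in m/s^2
    act_threshold -- assumed minimum confidence before reacting at all
    """
    if confidence < act_threshold:
        # Too little evidence: do not react yet.
        return cruise
    # Highest speed from which the vehicle can still stop within `distance`.
    stop_speed = math.sqrt(2.0 * max_decel * distance)
    safe_speed = min(cruise, stop_speed)
    # Blend between cruising and the stop-capable speed by confidence,
    # so a vague detection slows the car without forcing a hard brake.
    return (1.0 - confidence) * cruise + confidence * safe_speed


if __name__ == "__main__":
    for conf in (0.1, 0.5, 1.0):
        print(conf, round(target_speed(15.0, 8.0, conf), 2))
```

With low confidence the vehicle keeps cruising; as confidence rises it eases toward a speed from which it can still stop, which mirrors the "knew enough to slow down" behavior described above.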

The team extended their approach in another paper that focused on robots deployed to disaster scenarios and on Mars—simulations in which the goal was to efficiently explore an unknown environment where energy budgets are limited. In such cases, the framework they developed allowed the vehicles to operate autonomously despite receiving only partial information. For instance, they could pursue a search for victims who are more seriously wounded than others, and navigate terrain that could otherwise bog down or halt a space mission.

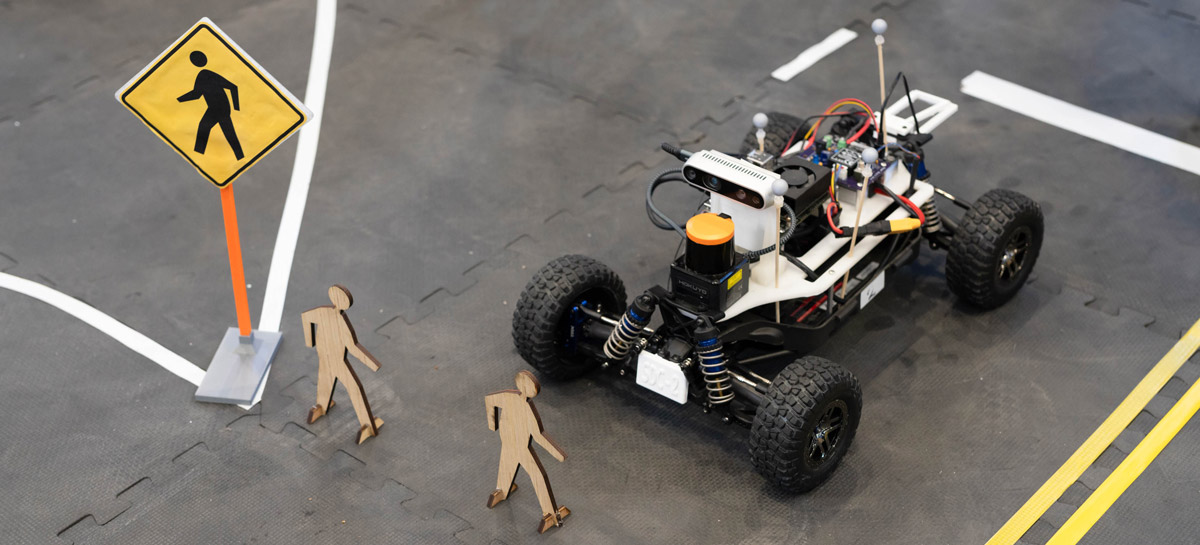

The next step, says Vasile, is to test their algorithms in a version of the real world. Beginning this spring, he and his team will run experiments using race cars in a miniature urban environment located in the AIR Lab. The platform was built by a team of undergraduates participating in Lehigh’s Mountaintop Summer Experience program. The students have been working on the testbed since 2020, and it now features buildings, streets, traffic lanes and markings, and a localization system, similar to an indoor GPS. Vasile and his team will conduct their experiments using five miniature race cars equipped with sensors.

“We want to see how well the perception model and control algorithms we’ve been developing perform in urban scenarios where you have intersections, lights, and signs, and have to interact with other cars,” he says.

The ultimate goal, however, is to ensure the safety of the newest fleet of technology to hit the roads. “The moonshot for this research is that it will allow us to connect guarantees about perception systems with guarantees about decision-making and control, so that together, we have guarantees about the behavior of robots and self-driving cars in particular.”

Navigating with ‘next best’

In the future, it may be common practice to use robots to deliver assets—medicine, supplies, tech of all sorts—to unknown places. There are the myriad issues around how machines will carry those items (the focus of Saldaña’s research) and how they will interpret information as they travel (Vasile’s work). On top of that, add the challenge of actually reaching their destination.

“When an asset-carrier robot navigates an environment using a perception model, there’s often a level of uncertainty about its surroundings,” says Motee. “This could be because the model was trained with a limited number of low-quality views. To mitigate the risk in decision-making and planning, the robot needs to enhance its perception of the environment. However, doing this in real time is a computationally demanding task.”

Motee has developed a way in which robots work in tandem to help the asset-carrier. These helper robots take samples and new views from the environment and feed the information to the carrier, allowing it to learn faster and update its perception of the environment.

“But then the question becomes, what is the next best view that a robot should use for improving its perception?” he says. “It’s like when you’re getting advice from several of your friends at once. You can’t do everything, so you decide to follow the one piece of advice that sounds the most relevant to your goal. It’s a similar situation with robots. They can’t use all the different views because that would be computationally expensive and cannot be done in real time. They have to make a decision about which is the next best view.”
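One simple way to picture the choice Motee describes is a greedy rule: score each candidate view by how much uncertainty it is expected to remove, then take the single best one. The sketch below is illustrative only and is not the AIR Lab's algorithm. The `View` type, the per-cell uncertainty map, and the assumption that observing a cell halves its uncertainty are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class View:
    """A candidate view offered by a helper robot (hypothetical type)."""
    name: str
    cells: frozenset  # indices of the map cells this view would observe


def expected_gain(view, uncertainty, reduction=0.5):
    """Uncertainty removed if each observed cell shrinks by `reduction` (assumed)."""
    return sum(uncertainty.get(cell, 0.0) * reduction for cell in view.cells)


def next_best_view(views, uncertainty):
    """Greedy choice: the one view with the largest expected gain."""
    return max(views, key=lambda v: expected_gain(v, uncertainty))


# Made-up uncertainty for four map cells, and three candidate views.
uncertainty = {0: 0.9, 1: 0.2, 2: 0.7, 3: 0.1}
views = [
    View("left", frozenset({0, 1})),
    View("front", frozenset({0, 2})),
    View("right", frozenset({1, 3})),
]
best = next_best_view(views, uncertainty)

if __name__ == "__main__":
    print(best.name)
```

Scoring every candidate but processing only the winner keeps the expensive perception update to one view per step, which is the trade-off the friends' advice analogy points at.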

Motee recently received funding from the Office of Naval Research to answer this question of control without trust. He and his team are combining computer vision with virtual reality to develop algorithms that will help robots update their perception in ways that reduce the uncertainty and risk posed by unknown environments.

“In an ideal scenario where we have complete knowledge about an environment, risk is nonexistent,” he says. “However, uncertainty introduces the possibility that an incorrect action could result in failure. In real-world applications, robots need to attain a trustworthy understanding of their surroundings efficiently, while staying within their computational constraints. Given the inherent uncertainty in all perception models, robots capable of rapid online learning can significantly enhance their understanding of the environment. This improvement not only reduces their level of uncertainty and associated risks but also enables them to more effectively and efficiently fulfill their designated tasks.”

Rising to the challenge

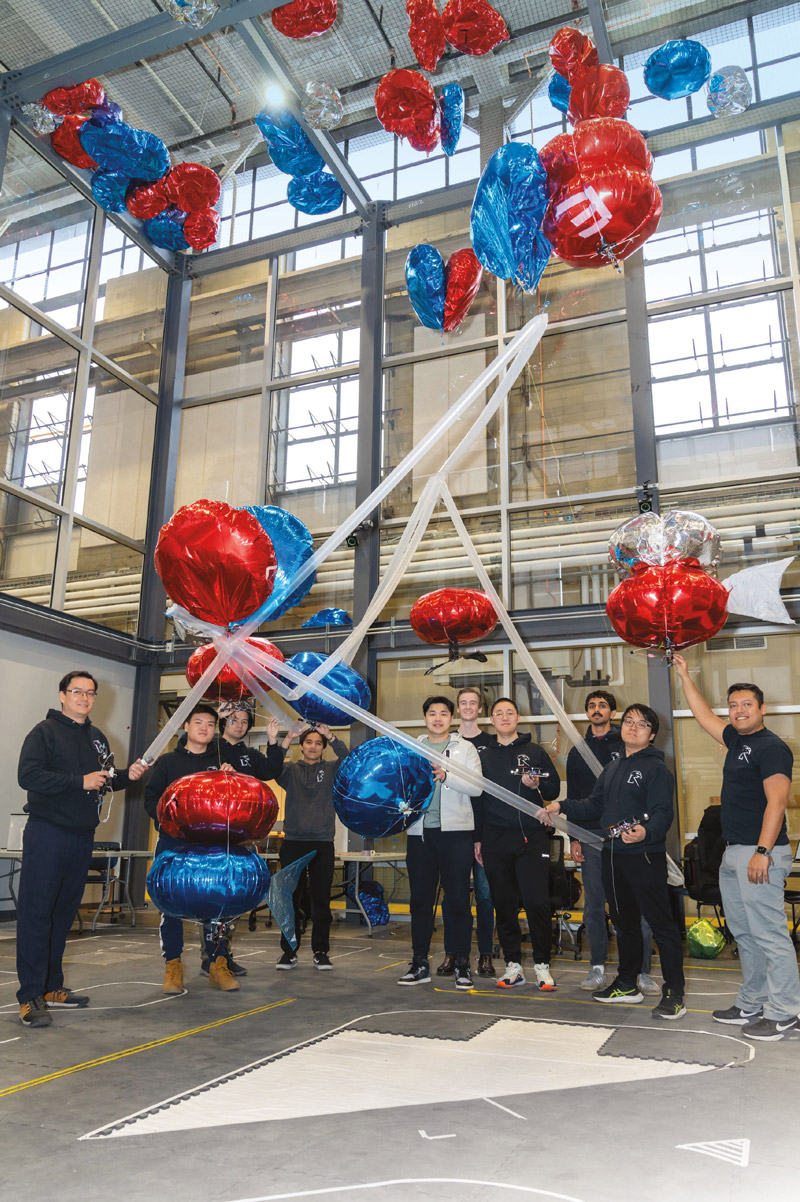

Accomplishing missions in the real world was the goal of last November’s Defend the Republic drone competition. The event was organized by the Swarms Lab (Saldaña’s lab) and collaborators with the support of Lehigh’s Institute for Data, Intelligent Systems, and Computation (I-DISC), the computer science and engineering (CSE) department, the Rossin College, and the Provost Office. It was Lehigh’s first time as host with the Mountaintop campus as the main venue.

Teams from eight universities participated in a Quidditch-esque game (that’s a Harry Potter reference for the unaware) in which autonomous aerial robots vied to capture floating helium balloons and deliver them to the opponent’s goal. The competition is held twice a year at universities across the country and aims to drive research and innovation in vehicle design, multi-agent control, swarm behaviors, and communication.

“Often in research, you run an experiment a couple of times, establish proof of concept, and that’s enough to publish a paper,” says Saldaña. “But in a competition environment, you have to really show that your robots are robust and reliable, again and again. It adds another level to the research process, and pretty quickly, you’re able to spot your strengths and weaknesses.”

Lehigh entered 21 robots into the competition, the largest contingent of any competitor, and ultimately made it to the final round despite some glitches with sensors that reacted poorly to sunlight. (Teams from George Mason University, the University of Florida, and Baylor University took home top honors.) Such a show of technological strength was a testament to the Lehigh Aerial Swarms Robotics Club, a group Saldaña started in 2022. Although the club is officially for undergraduates, Saldaña says that graduate students play a significant role as mentors.

“Students can pursue so many different interests within the club,” he says. “They can build robots and compete, or if they’re interested in research, they can join forces with our PhD students. We travel to events and conferences. The club is also connected with senior design projects and capstones, and it helps launch students into master’s and PhD programs.”

The group formed as an offshoot of a Mountaintop Summer Experience project Saldaña started in 2022 that explored using robots for social impact. In that project, students are studying how to use robots to deliver communication access points when typhoons cause widespread power outages.

“The students who are part of these clubs and projects are the engine behind so much of our success at events like Defend the Republic,” he says. “When they come into a research competition like that, they can’t have a simple idea. They have to think out of the box, and they need a clear goal. It’s a unique experience for them.”

To further stoke excitement for the competition and all it entails, Saldaña is teaching a new course in aerial robotics for undergraduates this spring.

“Very few universities have a class like this because it requires a lot of infrastructure and support,” he says. “But thanks to the CSE department and college leadership, we’re now one of the few.”

Such opportunities across research and practice will help propel the next generation of students to further push the boundaries of robotics and narrow the gap to true intelligent autonomy, says Motee.

“That’s really what the AIR Lab is about,” he says. “It’s about providing a place for collaboration and shared ideas for both students and faculty. The opportunities here are endless, and that’s how Lehigh is setting itself up to be a leader in the field.”