In machine learning, algorithms make the magic happen. Through a series of complex mathematical operations, algorithms train the model to essentially say, ‘Ok, this input goes to that output.’ Give it enough pictures of a cat (input), assign those pictures a label, “cat” (output), and eventually, the connection is made. You can feed the model images of felines it’s never seen before, and it will accurately predict—i.e., identify—what animal it’s “seeing.” (Computers don’t actually “see” the image, but rather numbers associated with pixels that mathematically translate into “cat” or “dog,” or whatever it’s being trained to recognize.)

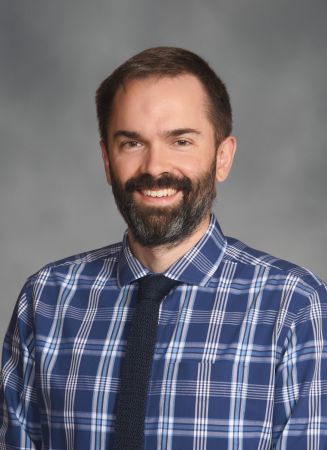

“When we’re born, our brains don’t have all the connections that tell us how to identify a cat,” says Frank E. Curtis (pictured), a professor of industrial and systems engineering. “We learn over time, through observation, through making mistakes, through people telling us, ‘This is a cat.’ Learning to make that connection is essentially an algorithm that we follow as people.”

Take learning to walk, for example. Our brains’ algorithm tells us to move forward in a certain way. If we don’t fall, we know we did something right, and we’re ready to take the next step. If we trip, we try to correct what we did wrong so we don’t fall the next time. The process continues until we’ve mastered the skill. “The algorithm is just a process of updating the model over and over again,” says Curtis, “and trying to move it toward getting a higher percentage of correct predictions based on the data you have.”
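
To make that "update over and over" idea concrete, here is a minimal sketch using a perceptron, one of the simplest learning algorithms. It is only an illustration, not the kind of algorithm Curtis designs. The two features (ear pointiness and snout length) and all the numbers are invented for this example; real image models work from pixel values.

```python
# Toy sketch: a perceptron that changes its weights only when it makes a mistake.
# Features and data are hypothetical, chosen to keep the example small.

def predict(weights, bias, x):
    """Return 1 ("cat") if the weighted sum is positive, else 0 ("dog")."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def train_perceptron(samples, labels, epochs=20, lr=1.0):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # 0 when the guess was right
            if error != 0:
                # "We tripped": nudge the model toward the correct answer.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors, so stop updating
            break
    return weights, bias

# (ear pointiness, snout length); label 1 = cat, 0 = dog
samples = [(0.9, 0.2), (0.8, 0.1), (0.7, 0.3), (0.2, 0.9), (0.3, 0.8), (0.1, 0.7)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_perceptron(samples, labels)
accuracy = sum(predict(weights, bias, x) == y for x, y in zip(samples, labels)) / len(samples)
print(accuracy)
print(predict(weights, bias, (0.85, 0.15)))  # an example the model never saw
```

Each wrong guess shifts the weights a little, and training stops once a full pass over the data produces no mistakes, which mirrors the walk-trip-correct loop described above.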

Curtis’s research focuses on the design of algorithms—how to make them faster, more accurate, and more transferable across tasks, like a single algorithm that can do speech recognition across multiple languages. He’s also recently started studying how to make them more fair. In other words, how to design them to make predictions that aren’t influenced by bias in the data.

“There isn’t going to be a perfect algorithm because there isn’t going to be a perfect sense of what’s fair and what’s not,” he says. “It’s also important to remember that humans don’t always do the best job either in making fair decisions.”

The ramifications of bias are real. Take an algorithm designed to determine who gets a loan. The model is fed data that contain features, essentially bits of information. When it comes to fairness, Curtis says, the goal is to ensure that certain features aren’t overly influencing the model’s prediction. So, let’s say race is removed from the data. The model is then trained on data that might still include other information, such as home addresses that correspond with certain populations.

“Even though you took the specific feature of race out,” he says, “there still might be other features in the data that are correlated with race that could lead to a biased outcome as to who gets a loan and who doesn’t.”
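
A small sketch can show how such a proxy works. The records below are entirely synthetic, and the group labels ("A", "B"), the neighborhoods, and the 50 percent approval rule are assumptions made for illustration. The "model" never looks at group membership, only at neighborhood, yet its decisions still split sharply by group.

```python
from collections import defaultdict

# Synthetic records: (group, neighborhood, historically_approved).
# Group A lives mostly in "north", group B mostly in "south".
records = (
    [("A", "north", 1)] * 7 + [("A", "north", 0)] * 1
    + [("A", "south", 1)] * 1 + [("A", "south", 0)] * 1
    + [("B", "north", 1)] * 1 + [("B", "north", 0)] * 1
    + [("B", "south", 1)] * 2 + [("B", "south", 0)] * 6
)

def fit_neighborhood_rule(rows):
    """Learn 'approve this neighborhood?' from past approvals; group is ignored."""
    counts = defaultdict(lambda: [0, 0])  # neighborhood -> [approved, total]
    for _group, neighborhood, approved in rows:
        counts[neighborhood][0] += approved
        counts[neighborhood][1] += 1
    return {n: (a / t) >= 0.5 for n, (a, t) in counts.items()}

def approval_rate_by_group(rows, rule):
    """Apply the rule, then measure approval rates per group after the fact."""
    totals = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, neighborhood, _approved in rows:
        totals[group][0] += int(rule[neighborhood])
        totals[group][1] += 1
    return {g: a / t for g, (a, t) in totals.items()}

rule = fit_neighborhood_rule(records)
rates = approval_rate_by_group(records, rule)
print(rule)   # which neighborhoods the rule approves
print(rates)  # approval rate for each group
```

In this toy data the rule approves "north" and rejects "south", so 80 percent of group A is approved against 20 percent of group B, even though the rule was never shown the group column.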

Curtis wants to figure out how to guide algorithms to better balance the objectives of accuracy and fairness when building machine learning models. This notion of “balance” is essential because as long as bias of any amount exists in the data, he says, there’s no way to build a model that’s both fair and accurate. Striking that balance would require building in fairness measures that keep bias within socially acceptable amounts, a move that would in turn require regulation of the algorithms themselves.

“Most governments haven’t done that yet, but that would give us a guideline to work with,” he says. “I can’t tell you what the prescribed amount of bias should be—that’s the job of policymakers. But if someone could tell me, I could design the algorithm to get us there.”
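
One way to picture that trade-off is as a constrained search: pick the most accurate model whose bias stays within a prescribed limit. The sketch below is an assumption-laden illustration, not Curtis's method. The applicant data are synthetic, "accuracy" means agreement with (biased) historical decisions, the bias measure is the gap in approval rates between two groups (one of many possible fairness measures), and `max_gap` stands in for whatever limit policymakers might set.

```python
from fractions import Fraction

# Synthetic applicants: (credit_score, group, historical_decision).
applicants = [
    (90, "A", 1), (80, "A", 1), (70, "A", 1), (60, "A", 1), (50, "A", 0),
    (55, "B", 1), (45, "B", 0), (40, "B", 0), (30, "B", 0), (20, "B", 0),
]

def evaluate(threshold, rows):
    """Return (accuracy, approval-rate gap between groups) for one score cutoff."""
    correct = 0
    approved = {"A": 0, "B": 0}
    size = {"A": 0, "B": 0}
    for score, group, label in rows:
        decision = int(score >= threshold)
        correct += int(decision == label)
        approved[group] += decision
        size[group] += 1
    accuracy = Fraction(correct, len(rows))
    gap = abs(Fraction(approved["A"], size["A"]) - Fraction(approved["B"], size["B"]))
    return accuracy, gap

def best_threshold(rows, max_gap, candidates=range(0, 101, 5)):
    """Most accurate cutoff whose gap is at most max_gap; ties go to the lowest cutoff."""
    feasible = []
    for t in candidates:
        accuracy, gap = evaluate(t, rows)
        if gap <= max_gap:
            feasible.append((accuracy, t))
    return max(feasible, key=lambda pair: pair[0])

# Exact fractions avoid floating-point surprises when comparing gaps to the limit.
tradeoff = {eps: best_threshold(applicants, Fraction(eps)) for eps in ("0.6", "0.4", "0.2", "0")}
for eps, (accuracy, cutoff) in tradeoff.items():
    print(f"max gap {eps}: cutoff {cutoff}, accuracy {float(accuracy):.1f}")
```

On this toy data, tightening the allowed gap from 0.6 to 0.4, 0.2, and 0 lowers the best achievable accuracy from 1.0 to 0.7, 0.6, and 0.5. The specific numbers mean nothing outside the example, but the pattern shows why a stated tolerance gives the algorithm designer a concrete target.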

Main image: atdigit/Adobe Stock