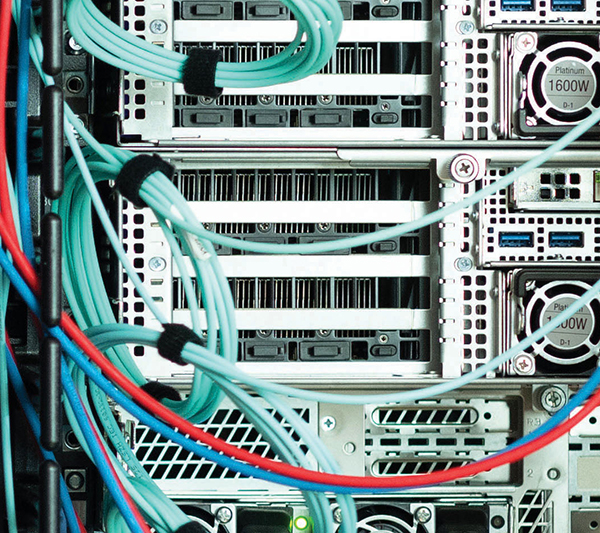

High-performance computing systems power the complex calculations and simulations behind our data-driven life—from better weather prediction to improved disease detection. Even the sophisticated supply chains that ensure Cyber Monday deals are delivered to your doorstep in two days depend on them.

To do all that requires very large machines that can handle vast amounts of data efficiently, says Roberto Palmieri, an assistant professor of computer science and engineering. It’s a challenge, he explains, because a typical laptop has four or eight processor cores on a single chip, while a supercomputer has hundreds of processor cores spread across several chips.

“When you execute a program on your laptop and it requires accessing data in memory, the latency time needed to retrieve the data is the same no matter which core you are executing from,” he explains. “But if you have hundreds of computer cores, then finding a specific piece of data is akin to finding a particular object in a town filled with many buildings. Someone looking for an object that’s not in their own house must spend time traversing a relatively extensive path to find it.”

Palmieri and Scalable Systems and Software (SSS) Research Group collaborator Michael Spear, an associate professor of computer science and engineering, have found a way to meet the need for speed by avoiding that journey altogether. Their novel method of building efficient data structures ensures that applications interacting with a data structure no longer need to contact other computing units to reach the data they want.

The resulting speedup could significantly improve the performance of any application that organizes large amounts of data, such as security tools or massive databases.

“Literally any complex application that requires data management can use this structure,” says Palmieri. “And because the software is open-source, anyone in the world can use it.”

Using a computer with 200 cores distributed across four processors, the team showed that when key metadata governing memory access is replicated across computing units, memory operations simply access memory in their local nonuniform memory access (NUMA) zone and therefore run far faster. It’s a shortcut: an index that links directly to the next step and avoids the full journey.
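To make the idea concrete, here is a minimal Python sketch of the trade-off, built on assumptions worth stating loudly. The sorted key index stands in for the "key metadata," NUMA zones are modeled as plain integers, and `ZoneAwareSortedSet` and `lookup_profile` are hypothetical names invented for illustration, not the team’s open-source code. Real NUMA placement needs operating-system support (for example, libnuma on Linux). This model only counts which copy of the index a thread would read.

```python
import bisect


class ZoneAwareSortedSet:
    """Hypothetical model of a sorted set whose index is either shared or replicated per NUMA zone."""

    def __init__(self, num_zones, replicate, home_zone=0):
        self.replicate = replicate
        self.home_zone = home_zone
        # Replicated: one index copy per zone. Shared: a single copy in the home zone.
        zones = range(num_zones) if replicate else [home_zone]
        self.copies = {z: [] for z in zones}
        self.local_reads = 0
        self.remote_reads = 0

    def insert(self, key):
        # Writes touch every copy so the replicas stay consistent (the price of replication).
        for index in self.copies.values():
            i = bisect.bisect_left(index, key)
            if i == len(index) or index[i] != key:
                index.insert(i, key)

    def contains(self, key, zone):
        # A thread reads its own zone's copy when one exists, else the home zone's copy.
        copy_zone = zone if zone in self.copies else self.home_zone
        if copy_zone == zone:
            self.local_reads += 1
        else:
            self.remote_reads += 1
        index = self.copies[copy_zone]
        i = bisect.bisect_left(index, key)
        return i < len(index) and index[i] == key


def lookup_profile(replicate, num_zones=4, num_keys=100):
    """Insert num_keys keys, look each one up from every zone, and report read locality."""
    s = ZoneAwareSortedSet(num_zones, replicate)
    for k in range(num_keys):
        s.insert(k)
    hits = sum(s.contains(k, z) for z in range(num_zones) for k in range(num_keys))
    return hits, s.local_reads, s.remote_reads


print(lookup_profile(replicate=False))  # (400, 100, 300)
print(lookup_profile(replicate=True))   # (400, 400, 0)
```

With four zones, mirroring the four processors in the experiment, the shared-index version serves only its home zone locally, while the replicated version keeps every lookup local. The cost appears in `insert`, which must update every copy; how the actual design handles writes is not described in this article.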

“With this approach, we are able to provide well-known data structure designs with performance in the range of 250 million operations per second on a data structure with 100 elements,” Palmieri says.

The team observed speeds more than 10 times faster than normal on a data structure with 256 elements and 160 parallel threads operating on it. Those threads performed typical operations on the data structure, such as inserting, deleting, or looking up an element, and each of these operations involves many memory reads and writes.
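The workload described above, many threads issuing a mix of inserts, deletes, and lookups against a small set of keys, can be sketched as a Python harness. The operation mix (80% lookups by default), the single global lock used as a naive baseline, and the function name `run_mixed_workload` are assumptions for illustration only. The article gives neither the team’s exact mix nor its baseline. Because of CPython’s global interpreter lock, the reported rate says nothing about hardware scalability; the sketch only shows the shape of such an experiment.

```python
import random
import threading
import time


def run_mixed_workload(num_threads=160, ops_per_thread=1_000, key_range=256,
                       lookup_pct=80, seed=42):
    """Hypothetical harness: threads run a lookup/insert/delete mix on a shared set."""
    shared = set(range(0, key_range, 2))  # start half full
    lock = threading.Lock()  # one global lock: a naive baseline, not a NUMA-aware design
    counts = [0] * num_threads

    def worker(tid):
        rng = random.Random(seed + tid)  # per-thread RNG so each thread's op sequence is reproducible
        done = 0
        for _ in range(ops_per_thread):
            key = rng.randrange(key_range)
            roll = rng.randrange(100)
            with lock:
                if roll < lookup_pct:
                    _ = key in shared                       # lookup
                elif roll < lookup_pct + (100 - lookup_pct) // 2:
                    shared.add(key)                         # insert
                else:
                    shared.discard(key)                     # delete
            done += 1
        counts[tid] = done

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    elapsed = time.perf_counter() - start
    total = sum(counts)
    return total, total / elapsed, shared


total, rate, final = run_mixed_workload()
print(total)  # 160000
```

The defaults echo the experiment’s 256 elements and 160 threads. A NUMA-aware structure would replace the global lock and the single shared set; that part is deliberately left out here.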

Innovations like this one, Palmieri explains, are a credit to the collaborative nature of the SSS group, which he founded with Spear in 2018 following a three-year, $500,000 grant from the National Science Foundation. Since then, faculty members and numerous graduate and undergraduate students have joined. The team is also exploring energy-efficient architectures, speculative parallelization, and transactional memory.