As you waste your lunch hour scrolling through cat videos, snarky celebrity-bashing memes, and videos from your niece's third birthday party, for just a moment consider the majesty of the system behind the screen that enables such lightning-fast access to literally everything under the sun.

When users request files, images, and other data from the internet, a computer's memory system retrieves them and stores them locally. To help the computer process that data quickly, a relatively small hardware store called a cache sits close to the central processing unit, or CPU. The CPU can read data from the cache far more quickly than it can fetch it from main memory, let alone over the open airwaves of the internet.

Data is usually brought into the cache in chunks, called blocks. Under a principle called locality, when a program accesses one piece of data, the system also fetches the neighboring data in the same block. In theory, this improves efficiency: if nearby data is requested later, or you decide you need to watch that cat fall into the fish tank one more time, the cache can deliver it almost instantly.
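For readers curious about the mechanics, here is a minimal Python sketch of block fetching, not a model of any real processor. It assumes a toy direct-mapped cache with hypothetical parameters (64 sets, 64-byte blocks); on every miss, the whole block containing the requested address is brought in, so later reads of neighboring addresses hit.

```python
class DirectMappedCache:
    """Toy direct-mapped cache (hypothetical parameters) that fetches a whole block on each miss."""

    def __init__(self, num_sets: int = 64, block_size: int = 64):
        self.num_sets = num_sets
        self.block_size = block_size
        self.tags = [None] * num_sets  # one tag per set; None means the set is empty
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        block_number = address // self.block_size  # which block holds this byte
        index = block_number % self.num_sets       # which set that block maps to
        tag = block_number // self.num_sets        # identifies the block within its set
        if self.tags[index] == tag:
            self.hits += 1
            return True
        # Miss: bring in the entire block, so its neighbors are now cached too.
        self.tags[index] = tag
        self.misses += 1
        return False


def run(addresses, **kwargs):
    """Replay an address trace and return (hits, misses)."""
    cache = DirectMappedCache(**kwargs)
    for address in addresses:
        cache.access(address)
    return cache.hits, cache.misses


if __name__ == "__main__":
    # Sequential 4-byte reads over 4 KiB: neighbors share a 64-byte block.
    print(run(range(0, 4096, 4)))
    # One read per 64-byte block: no neighbor is ever reused.
    print(run(range(0, 4096, 64)))
```

Reading 4 KiB sequentially in 4-byte steps produces 64 misses (one per block) and 960 hits, while touching only one address per block, a stand-in for scattered access, misses every time.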

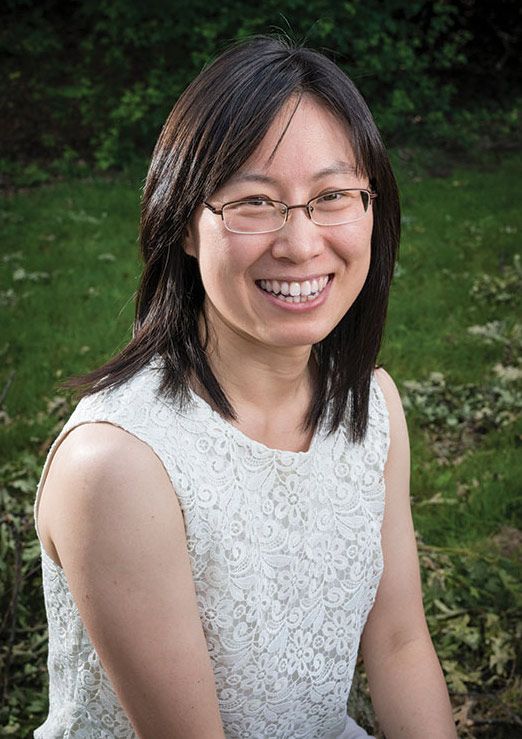

But as applications become ever more complex and user behavior becomes increasingly random, says Xiaochen Guo, P.C. Rossin Assistant Professor of Electrical and Computer Engineering, fetching a large chunk of data becomes a waste of energy and bandwidth.

As she explores ways to solve this conundrum, Guo is supported by a prestigious Faculty Early Career Development (CAREER) Award from the National Science Foundation (NSF), granted annually to emerging academic researchers and leaders who serve as role models in research and education.

“Our goal is to improve data movement efficiency by revamping memory systems to proactively create and redefine locality in hardware,” she says. “New memory system designs through the project hold the potential to unlock fundamental improvement, and we believe this may in turn prompt a complete rethinking of programming language, compiler, and run-time system designs."

Identifying patterns and reducing overhead

Most previous attempts at solving this issue, Guo says, came at the cost of increased memory overhead. Such systems end up devoting more and more storage to metadata, the information that simply identifies and tracks the data being stored.

"Reducing metadata is a key focus of our work," says Guo, who is also a two-time winner of IBM’s prestigious Ph.D. Fellowship. "Memory overhead translates directly to cost for users, which is why it's a major concern for hardware companies who might be considering new memory subsystem designs."

Guo's new design aims to resolve the metadata issue by identifying patterns in memory access requests.

"In a conventional design, the utilization of the large cache block is low—only 12 percent for even the most highly optimized code," she says. "With this work, we are looking more closely at correlations among different data access requests. If we find there's a pattern, we can reduce the metadata overhead and enable fine-granularity caches."
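To make "utilization" concrete, the sketch below measures what fraction of fetched bytes a program actually touches. It is a simplified illustration under our own assumptions (an unbounded cache with no evictions, a synthetic access trace, hypothetical function names) and does not reproduce Guo's 12 percent measurement. It also counts how many tag entries, one form of metadata, a fixed-size cache needs at each block size, which is the overhead fine-grained caches must keep in check.

```python
def block_utilization(accesses, block_size):
    """Return (fraction of fetched bytes actually used, number of blocks fetched).

    accesses: iterable of (address, size) byte ranges read by a program.
    Assumes an unbounded cache, so every block is fetched at most once.
    """
    touched_bytes = set()
    fetched_blocks = set()
    for address, size in accesses:
        for byte in range(address, address + size):
            touched_bytes.add(byte)
            fetched_blocks.add(byte // block_size)  # the whole block comes in
    if not fetched_blocks:
        return 0.0, 0
    fetched_bytes = len(fetched_blocks) * block_size
    return len(touched_bytes) / fetched_bytes, len(fetched_blocks)


def tag_entries(capacity_bytes, block_size):
    """Number of tags (metadata entries) a cache of this capacity needs."""
    return capacity_bytes // block_size


if __name__ == "__main__":
    # Synthetic trace: 4-byte reads spaced 256 bytes apart over 64 KiB.
    trace = [(addr, 4) for addr in range(0, 64 * 1024, 256)]
    for block in (64, 8):
        util, _ = block_utilization(trace, block)
        print(f"{block}-byte blocks: {util:.2%} used, "
              f"{tag_entries(32 * 1024, block)} tags for a 32 KiB cache")
```

For this toy trace, 64-byte blocks use 6.25 percent of what they fetch while 8-byte blocks use 50 percent, but a 32 KiB cache with 8-byte blocks needs 4,096 tags instead of 512. That trade-off is why finding patterns that shrink metadata matters.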

Reading a picture pixel by pixel is generally a simple enough task for a computer, and locality in that case is fairly good. But when a system runs multiple tasks at once or performs something more complex (think of the random scrolling and clicking that defines your social media habits), Guo's designs could enable hardware to learn, and eventually predict, access patterns based on past and present behavior. As these predictions improve, the system can fetch and cache only the most useful data. So far, preliminary results have been encouraging.
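Hardware prediction of access patterns is a broad field. As one hedged illustration, and not Guo's design, here is a classic stride predictor in Python: it remembers the last address and the last gap between addresses, and once the same gap appears twice in a row it guesses the next address. The class and function names are hypothetical.

```python
class StridePredictor:
    """Predicts the next address once the same nonzero stride is seen twice in a row."""

    def __init__(self):
        self.last_addr = None
        self.last_stride = None

    def observe(self, addr):
        """Record an access; return the predicted next address, or None."""
        prediction = None
        if self.last_addr is not None:
            stride = addr - self.last_addr
            if stride == self.last_stride and stride != 0:
                prediction = addr + stride
            self.last_stride = stride
        self.last_addr = addr
        return prediction


def prediction_accuracy(trace):
    """Return (correct predictions, predictions checked) over an address trace."""
    predictor = StridePredictor()
    correct = checked = 0
    pending = None  # prediction made on the previous access
    for addr in trace:
        if pending is not None:
            checked += 1
            if pending == addr:
                correct += 1
        pending = predictor.observe(addr)
    return correct, checked


if __name__ == "__main__":
    print(prediction_accuracy(list(range(0, 800, 8))))  # steady 8-byte stride
    print(prediction_accuracy([0, 64] * 10))            # bounces between two addresses
```

On a steady 8-byte stride, the predictor is right on all 97 guesses it makes; on a trace that bounces between two addresses, it never makes a guess at all, because the gap keeps flipping sign. Catching patterns like that calls for looking at richer correlations among requests.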

"For our preliminary work on fine-grained memory, the proposed design achieves 16 percent better performance with a 22 percent decrease in the amount of energy expended compared to conventional memory," she says. "This is an especially important topic when it comes to machine learning. The entire community is looking at how to accelerate deep learning applications. Improving the way that memory systems recognize complex patterns will improve the performance and scalability of deep learning applications, enabling larger models with higher accuracy to be run, quite quickly, on smaller devices. This allows for a highly desirable combination of increased application performance and decreased energy consumption."

Growing computers in petri dishes

Will the computers of tomorrow be manufactured, or will they be cultivated?

This question lies at the heart of a team-based project Guo is undertaking that aims to engineer a neural network—a computer system modeled on the human brain and nervous system—from actual living cells, and program it to compute a basic learning task.

“Recent developments in optogenetics, patterned optical stimulation, and high-speed optical detection enable simultaneous stimulation and recording of thousands of living neurons,” she says. “And scientists already know that connected biological living neurons naturally exhibit the ability to perform computations and to learn. With support from NSF, we are building an experimental testbed that will enable optical stimulation and detection of the activity in a living network of neurons, and we’ll develop algorithms to train it.”

The team, which includes Lehigh colleagues Yevgeny Berdichevsky and Zhiyuan Yan, brings together complementary expertise in computer architecture, bioengineering, and signal processing. In their project, images of handwritten digits are encoded into what are called "spike train stimuli," patterns somewhat like a two-dimensional bar code. These spike trains are then applied optically to a network of in vitro neurons carrying optogenetic labels. The intended impact of this work is to help computer engineers develop new ways to think about the design of solid-state machines and to influence other brain-related research.
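The article does not spell out the team's encoding. As a hedged illustration only, one widely used way to turn pixel intensities into spike trains is rate coding: at each time step, every pixel "fires" with a probability proportional to its brightness, so each step is a 2-D on/off pattern, loosely like a bar code flashed in sequence. The function names and parameters below are hypothetical, and the optical delivery to living neurons is not modeled.

```python
import random


def rate_encode(image, num_steps=20, max_rate=1.0, seed=0):
    """Convert a 2-D grayscale image (values 0..255) into a spike train.

    Returns num_steps frames; each frame is a 2-D grid of 0/1 spikes, where a
    pixel fires at each step with probability (intensity / 255) * max_rate.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    frames = []
    for _ in range(num_steps):
        frame = [[1 if rng.random() < (px / 255) * max_rate else 0 for px in row]
                 for row in image]
        frames.append(frame)
    return frames


def spike_counts(frames):
    """Total spikes per pixel across all frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(frame[r][c] for frame in frames) for c in range(cols)]
            for r in range(rows)]


if __name__ == "__main__":
    # A tiny 3x3 "digit 1": a bright vertical stroke on a dark background.
    digit_one = [[0, 255, 0],
                 [0, 255, 0],
                 [0, 255, 0]]
    print(spike_counts(rate_encode(digit_one)))
```

With the default settings, the bright stroke fires on every one of the 20 steps and the dark background never fires; mid-gray pixels would fire on a random fraction of steps.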

Guo says that these two projects are representative of her lab's work exploring what's next in computing architectures and platforms.

Says Guo: “As the computing industry reaches the fundamental limits of Moore’s Law and is challenged to find ways to continue innovating, we need to improve the technologies we have today—and we need to explore revolutionary ideas that will enable the forward march of progress tomorrow.”