Our day-to-day lives rely on stable infrastructure and a continuous flow of energy. We want to get to where we are going, turn on the lights, drink clean water, connect with friends—without interruption and without giving it a second thought.

With so much riding on the systems that hum in the background of our society, we expect they are constructed, maintained, upgraded, and rebuilt in a straightforward, rational way, based on science, engineering, economics, and sound public policy.

If only it were so simple.

When a subway tunnel collapses, cell phone service cuts off, or some other system failure occurs, the questioning begins. Why did it fail? Why was it poorly maintained? Why were we unprepared for a catastrophe? Why is it so hard to get back to normal?

“Resilience is a measure of how fast we can restore the functions of these systems after a damaging event,” says Richard Sause, Lehigh’s Joseph T. Stuart Professor of Structural Engineering. “The goal is clear. For example, a storm comes, the power goes off, your phone stops working, and the roads are impassable. Everyone wants it fixed, ASAP. It would be even better if the damage never happened at all.

“Unfortunately, systems like power and transportation often depend on each other. You can’t repair the power without transportation, and vice versa,” he explains. “Perhaps more importantly, we would like to think that the processes and decisions to maintain and restore these systems are driven by things that engineers can measure, calculate, or observe. But in reality, these processes and decisions are driven by people, both as individuals and as communities.”

Understanding how humans and society influence the risks surrounding infrastructure and energy systems—and how they cause damage and address the need for restoration—is a challenge that goes beyond engineering. A 360-degree view of the problem requires expertise from fields such as sociology, psychology, and economics.

“Clearly, these are expansive, complex issues,” Sause says. “We need perspectives from many disciplines to find effective solutions.”

Creating an intellectual space for addressing these complex issues—and nurturing large-scale research initiatives as they flourish—is among the top priorities for Lehigh’s new Institute for Cyber Physical Infrastructure and Energy (I-CPIE).

“We chose cyber physical infrastructure and energy as the focus because of the sheer magnitude of their importance to society,” says Sause, who serves as director of I-CPIE, which shares with its sister research institutes a mandate to attack persistent global problems.

“Infrastructure and energy are two important grand challenges that are closely linked from a systems perspective, and thus it makes sense to address them together. As it turns out, we have some unique capabilities in those areas at Lehigh, so it’s a natural fit.”

The university has a long-standing and well-deserved reputation in physical infrastructure, structural engineering, and power generation: The Advanced Technology for Large Structural Systems (ATLSS) Center is one of the largest and most renowned structural engineering facilities in the world, and Lehigh’s energy researchers have been producing seminal work in fields such as generation, delivery, and environmental mitigation for decades. The university has also made significant investments resulting in robust capabilities in cyber and data sciences.

According to Shalinee Kishore, Iacocca Chair and Professor of Electrical and Computer Engineering and I-CPIE’s associate director, it is no secret that the nation’s infrastructure is in serious need of upgrades. The American Society of Civil Engineers gave the country a D+ grade in its last infrastructure report card, citing a minimum funding gap of more than $2 trillion. On top of that, with the aftermath of Hurricane Sandy in the Northeast and other increasingly severe weather events, the race is on to find ways to protect infrastructure that is vulnerable.

“There are more than a dozen critical infrastructure systems in the U.S., including the power grid, water, transportation systems, and so on. The point is that it’s not a one-shot upgrade at which we can simply throw a couple trillion dollars and make everything OK,” she says. “You have to put in new systems and retrofit legacy systems, and all of them have to work together. And even if we install new infrastructure across the board, those systems begin to degrade the next day. We have to develop solutions that are resolvable over time, and we have to start now.”

Zero emissions, loads of data

One top-drawer project I-CPIE researchers are currently pursuing would put a significant dent in carbon emissions in California, where the Valley Transit Authority (VTA) in Santa Clara is going electric with its buses. The Lehigh-proposed system would rely on a microgrid infrastructure of interconnected, multipurpose hubs equipped with solar or wind energy converters, power storage units, and command and control systems. Significantly, this could provide a blueprint for future electrification across the state, as California has mandated that all mass transit systems run zero-emission bus fleets by the year 2040.

Kishore, a collaborator on the project, says that Lehigh’s recently established Western Regional Office was a key asset in getting the promising proposal to the final round.

“The Western Regional Office helped us connect with VTA as well as the associated companies needed to deploy a system of this nature,” she says. “They were able to go out and talk about our institute and demonstrate connections between the research needs and our faculty expertise. Especially for complex team efforts like this, this kind of exposure and support is crucial.”

The planned system for the VTA comprises microgrid stations placed across the sprawling bus routes of the Santa Clara region, which would collect and store electricity for bus charging. The microgrids would be connected to the regional grid for power transfer.

The final component of the VTA plan is data. Lots of data.

“There will be real-time information on the power consumption of the buses, the condition of the roads, traffic congestion and the state of the power systems across the network,” says Hector Muñoz-Avila, Class of 1961 Professor, and co-director of I-CPIE’s sister Institute for Data, Intelligent Systems and Computation, or I-DISC. “The data will be combined and used by the system to distribute the power load, and the system will use algorithms to route and schedule the buses,” he says.

The system will be adaptive, using a machine learning technique called reinforcement learning. In simple terms, this is a feedback loop: the system takes in data about its environment and makes a decision, and the results of that decision are fed back into the algorithm.

“This is a distinct family of machine learning, and it’s needed because of the sequential decision making involved. The decisions that the system makes now will be informed by a decision from the past, and these will inform decisions that the system will make in the future, refining results and learning at each step.”
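
For readers who want to see that feedback loop in miniature, here is a minimal sketch of tabular Q-learning, one common reinforcement learning method. It is not the system proposed for the VTA. The toy battery problem, the reward values, and every name in the code (`step`, `train`, `greedy_policy`) are assumptions made up for illustration.

```python
import random

# Hypothetical toy problem: a bus battery has charge levels 0..4.
# Action 0 = drive a route (reward 1, uses one unit of charge).
# Action 1 = recharge at a hub (reward 0, adds two units of charge).
# Trying to drive on an empty battery strands the bus (reward -5).
N_LEVELS = 5
ACTIONS = (0, 1)


def step(charge, action):
    """Return (next_charge, reward) for the toy environment."""
    if action == 1:
        return min(charge + 2, N_LEVELS - 1), 0.0
    if charge == 0:
        return 0, -5.0
    return charge - 1, 1.0


def train(episodes=500, steps=20, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a table of action values q[charge][action] by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_LEVELS)]
    for _ in range(episodes):
        charge = rng.randrange(N_LEVELS)
        for _ in range(steps):
            # Mostly pick the best-known action, but sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[charge][a])
            next_charge, reward = step(charge, action)
            # Feed the result of the decision back into the estimate.
            target = reward + gamma * max(q[next_charge])
            q[charge][action] += alpha * (target - q[charge][action])
            charge = next_charge
    return q


def greedy_policy(q):
    """Best-known action for each charge level."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_LEVELS)]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the learned policy recharges when the battery is empty and drives when it is full. Each choice is scored partly by the value of the situation it leads to, which is the sequential character of the problem described in the quote above.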

The electrification of the buses in California will put an increasingly large power draw on the main grid. The microgrids would increase the overall robustness of the grid, and would continue to operate even if other parts of the grid were to go offline due to natural or manmade events.

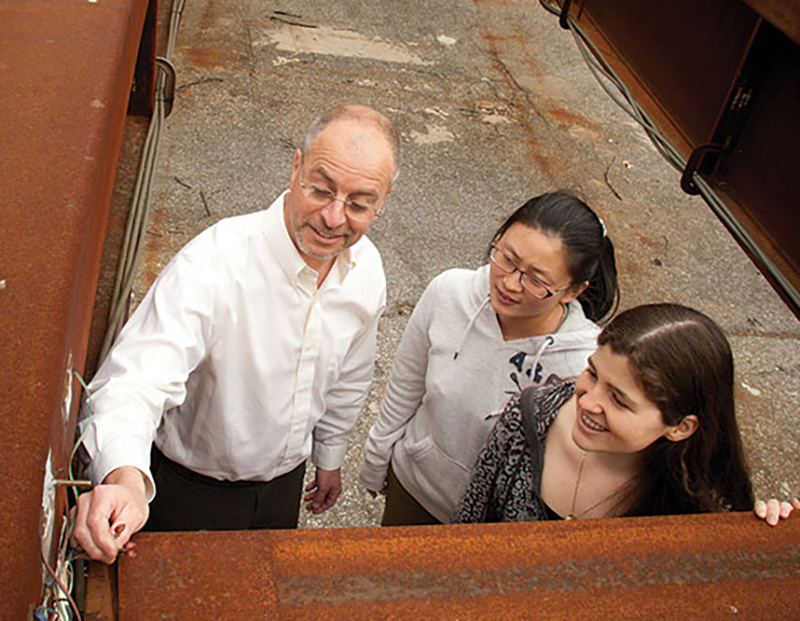

“None of this will occur in a vacuum, and there are a lot of things that need to be integrated,” points out Shamim Pakzad (photo), associate professor of civil and environmental engineering. “We have colleagues from urban planning and the social sciences helping us to understand how and why people make decisions as they use the system. There are specific events that make demands upon the system, such as sporting contests and musical performances that draw large numbers of people, and need to be considered in real time.”

This is a classic example of the need for interdisciplinary collaboration, says Muñoz-Avila.

“I do a lot of research on reinforcement learning, but I really don't have much experience in energy for transportation systems. It’s not possible for machine learning algorithms to solve the entire problem by themselves. That’s why we have a team of people looking at optimization in the context of a transportation system, putting all the pieces together. That is the only way a project of this magnitude is feasible—people of different expertise working side by side.”

“With this bus system, we have the power generation and distribution system as well as the transportation network interacting directly,” says Pakzad. “That raises some interesting questions, some of which are practical and some are more fundamental as to how infrastructure systems will interface. But all of these projects are part of an effort to use data and other tools to improve the quality of life for people who live in cities and communities.”

Secure systems, connected communities

According to Liang Cheng, associate professor of computer science and engineering, there is an increased security risk when layers of cybersystems are added together to complement, support, and manage physical infrastructure and its usage. Vulnerabilities can be exploited by nefarious actors or by sheer circumstance; eliminating or protecting those gaps is at the core of his research in cybersecurity, cyber-physical systems, and distributed systems.

“If someone were able to hack the traffic lights of an intelligent transportation network, that is a textbook cybersecurity danger, and would obviously cause a serious problem,” he says. “One can quite easily imagine many similar possibilities.”

Cheng’s work focuses on real-time sensing, model-driven data analytics, and the Internet of Things. As an example, programmable logic controllers (PLCs) are critical components of automated industrial control mechanisms, processing data in real time to keep systems running smoothly. They are also attractive targets, and Cheng cites the Stuxnet attack on Iran’s uranium enrichment centrifuges as a famous example.

“The engineering workstation in that system was compromised, and the attackers were able to gain device programming privilege to alter the control programs running on the PLCs,” he says. “By first identifying PLCs that can be hacked and then modifying their control programs, they changed the speeds of the centrifuges and produced vibrations which destroyed them.”

Cheng and Mooi Choo Chuah, a professor of computer science and engineering, along with PhD student Huan Yang, have successfully designed and tested an automated protection system that uses time-based signal analyses to detect suspicious variances in a PLC’s command executions.

“We insert checkpoints in the payload and modify the firmware to timestamp critical I/O and network operations,” he explains. “This allows us to find out how many milliseconds there are between different operations. If there’s an abnormal delay, a human operator is quickly alerted to inspect the issue.”
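
The idea of flagging an abnormal delay between timestamped operations can be sketched in a few lines. This is only an illustration under stated assumptions, not the team's detector: the baseline delays, the three-standard-deviation threshold, and the function names `build_baseline` and `find_abnormal` are all hypothetical.

```python
from statistics import mean, stdev


def build_baseline(delays_ms):
    """Summarize known-good delays between operations, in milliseconds."""
    return mean(delays_ms), stdev(delays_ms)


def find_abnormal(timestamps_ms, baseline, k=3.0):
    """Return (index, delay) pairs whose delay is more than k standard
    deviations away from the baseline mean."""
    mu, sigma = baseline
    alerts = []
    for i in range(1, len(timestamps_ms)):
        delay = timestamps_ms[i] - timestamps_ms[i - 1]
        if abs(delay - mu) > k * sigma:
            alerts.append((i, delay))
    return alerts


# Assumed example data: delays recorded while the controller ran normally.
normal_delays = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0, 10.3, 9.7]
baseline = build_baseline(normal_delays)

# Assumed timestamps of successive operations; one gap is far too long.
observed = [0.0, 10.0, 20.1, 30.0, 55.0, 65.1]
alerts = find_abnormal(observed, baseline)

if __name__ == "__main__":
    for index, delay in alerts:
        print(f"operation {index}: {delay:.1f} ms gap, alert the operator")
```

In this sketch only the 25-millisecond gap is flagged. A deployed detector would need a baseline measured on the actual controller and a threshold tuned to keep false alarms manageable.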

Some of Cheng’s other research has implications for the Internet of Things and blockchain-based applications, and provides rich possibilities for interdisciplinary work with social scientists, economists, healthcare professionals, and citizen scientists.

“Think about if you could monitor smart meters or share appliance information in the home,” he says. “Social service agencies fed that data could be informed if an elderly resident had not used a stove or turned on a television for an irregular period of time, and have someone look in.

“Crowd-sensing and crowdsourcing enabled by blockchain technologies are places where you could do a lot of good,” he continues. “For example, my team is studying the use of data from cars on less-traveled secondary and tertiary roads to notify authorities and other drivers of road conditions. It’s an interesting problem: there is a huge amount of data with a lot of noise that needs to be filtered to make the information useful.”

Cheng has also collaborated with Pakzad in creating an automated monitoring system for bridges.

“The work that I've done with Shamim is highly interdisciplinary, designing new computational and networking algorithms to process the data collected by wireless sensors on the bridge,” says Cheng. “Together, we devised a distributed and networked algorithmic system. Every monitoring node on the bridge does a computation to identify the local conditions, then forwards that information to the neighboring nodes. Previously, civil engineers had relied on a centralized process. This method is a more efficient way of assessing the overall condition of the bridge.”
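
The article does not spell out the algorithm, but the general pattern of nodes computing locally and exchanging results only with neighbors can be illustrated with a standard technique called consensus averaging. The four-node layout, the readings, and the mixing weight below are assumptions chosen for the example.

```python
def consensus_step(values, neighbors, weight=0.3):
    """One round: each node nudges its estimate toward its neighbors'."""
    new_values = {}
    for node, value in values.items():
        correction = sum(values[n] - value for n in neighbors[node])
        new_values[node] = value + weight * correction
    return new_values


def run_consensus(values, neighbors, rounds=200, weight=0.3):
    """Repeat the neighbor exchange for a fixed number of rounds."""
    for _ in range(rounds):
        values = consensus_step(values, neighbors, weight)
    return values


# Hypothetical sensor nodes along a bridge span; each talks only to the
# nodes next to it.
neighbors = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"]}

# Hypothetical local estimates computed independently at each node.
local = {"A": 1.0, "B": 1.2, "C": 0.9, "D": 1.3}

final = run_consensus(local, neighbors)

if __name__ == "__main__":
    print(final)
```

Every node ends up holding the network-wide average of the local estimates without any node ever collecting all the raw data, which is the contrast with a centralized process.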

Abundant energy, expertly extracted

Teams of I-CPIE researchers are also looking into ways to extract energy from the motion of water in the seas. According to estimates by the World Ocean Network, 50 percent of the globe’s population resides in coastal areas, and that figure could be as high as 75 percent by 2025.

Ocean wave energy generation has massive upside potential, and while there are high hurdles to clear before that potential can be fulfilled, it is tantalizing to consider the possibilities. Marine hydrokinetic energy generation in the United States from combined ocean and tidal sources has the capacity to top 1,400 TWh annually, most of it from ocean waves. That could provide up to 40 percent of the nation’s energy needs, says Arindam Banerjee, associate professor of mechanical engineering and mechanics.

“But these are very unforgiving and harsh environments,” cautions Banerjee, who has been working on the energy-water nexus with Kishore and other members of I-CPIE.

The current designs for ocean wave power generation use a float and an absorber: the float bobs up and down with the waves in a piston-like movement, while the absorber remains static, engaging a linear generator inside.

“What we are going through now is a design space shakeout,” says Banerjee. “Like with wind turbines, if you go back 40 years, you used to see a lot of different designs, and the industry has settled on a three-blade rotor design. That is what we’re doing with wave energy now, working towards the ideal design shape.”

Besides the design, there are complex physics and modeling problems to be solved to extract the maximum possible energy from a farm of generator buoys placed in the ocean. Banerjee estimates now that 30 buoys could eventually produce enough energy to power 300 households, but there is some highly intricate modeling required to reach that ideal.

“Energy converters need to be very precisely controlled,” says Rick Blum, the Robert W. Wieseman Research Professor of Electrical Engineering. “One way that this can be achieved is to accurately predict future wave activity.”

Blum and collaborators have developed techniques and low-complexity algorithms for these predictions, based on measurements taken from sensors around the converters. The team also characterized the negative impact of inaccurate predictions, along with other losses present in real systems.
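
To give a flavor of what a low-complexity predictor can look like, here is a sketch of a two-term autoregressive model fitted by least squares. It is a textbook technique, not the team's algorithm. The clean, noise-free sine wave standing in for sensor measurements is an assumption, and real seas are far less regular.

```python
import math


def fit_ar2(series):
    """Least-squares fit of x[t] ~ a * x[t-1] + b * x[t-2]."""
    s11 = s12 = s22 = r1 = r2 = 0.0
    for t in range(2, len(series)):
        x1, x2, y = series[t - 1], series[t - 2], series[t]
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        r1 += x1 * y
        r2 += x2 * y
    # Solve the 2x2 normal equations directly.
    det = s11 * s22 - s12 * s12
    a = (r1 * s22 - r2 * s12) / det
    b = (r2 * s11 - r1 * s12) / det
    return a, b


def predict(series, coeffs, horizon):
    """Roll the fitted model forward from the last two measurements."""
    a, b = coeffs
    history = list(series[-2:])
    forecast = []
    for _ in range(horizon):
        nxt = a * history[-1] + b * history[-2]
        forecast.append(nxt)
        history.append(nxt)
    return forecast


# Assumed stand-in for sensor data: an 8-second swell sampled every 0.5 s.
period_s, dt_s = 8.0, 0.5
wave = [math.sin(2 * math.pi * k * dt_s / period_s) for k in range(80)]

coeffs = fit_ar2(wave[:60])                       # learn from the first 30 s
forecast = predict(wave[:60], coeffs, horizon=20)  # predict the next 10 s
worst_error = max(abs(f - w) for f, w in zip(forecast, wave[60:]))

if __name__ == "__main__":
    print(coeffs, worst_error)
```

On this idealized input the forecast matches the true wave almost exactly. With noisy, irregular measurements the error grows with the prediction horizon, which is why the cost of inaccurate predictions matters.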

Larry Snyder, professor of industrial and systems engineering, working with Blum and Banerjee, has been researching how to best situate the buoys and how to understand the dynamics of ocean water movement for maximum benefit.

“Think about a wave that hits a device and then transforms because of the device. The next device the wave hits will see the transformed wave and be able to absorb a different amount of energy because of the transformation,” Snyder explains. “That amount of energy can be either higher or lower than it would have been without the first device. The arrangement of devices therefore has a big impact on the total energy produced by a wave energy farm. We have been developing optimization models for addressing this problem.”

One advantage that ocean wave and tidal energy technologies have over renewables like wind or solar is that the energy production is more predictable. A surprise cloudy day or downtick in wind speeds can decrease the amount of electricity a solar array or wind turbine will produce. “If you're an independent power producer and you're going to be penalized for deviation, what is your optimal strategy?” asks Alberto Lamadrid, associate professor of economics with Lehigh’s College of Business, who is a partner on the project.

Lamadrid calculates that ocean wave energy generation is on average 15 percent more predictable than wind and solar energy, a significant advantage.

“One of the main factors you have to consider here is ancillary services, which is the way energy producers cover shortages when they can’t meet promised production levels,” he explains. “It’s akin to short-term insurance. If I can’t meet the energy coverage I promised, I need someone to help me cover the gap.”

Lamadrid believes ancillary services are currently undervalued domestically, and thus the reliability of hydrokinetic power generation offers an interesting opportunity in the energy market.

Besides reliability, there are myriad other economic factors a renewable energy producer must evaluate when linking to the grid, and deducing the best course of action is a tangled affair. Lamadrid’s work involves a dizzying balance of time parameters, market prices, storage capacities, and much more to extract the best course in any given moment for hydrokinetic generators to interface with the market.

Verdant Power, a New York-based company, will soon be putting the first underwater tidal turbine farm into the East River to capture the energy of tidal flows.

“This will be the first time that there's going to be tidal power generation from a tidal farm that is connected to the power grid in the continental United States,” says Banerjee. “We’ve done a lot of testing, and of course the open environment is quite different from simulations or what you see in the lab, but we are very excited.”

The Roosevelt Island Tidal Energy project has a 10-year license, and is slated to generate 1 MW of electricity from the back and forth flows of the East River, a tidal estuary in New York City.

“I have been working on this technology for more than a decade, and I’d really like to see it evolve,” says Banerjee. “Even if we don’t reap the benefits directly, the next generation will. That motivates me as a researcher and as a person.”