By Katie Kackenmeister

Photo by Ryan Hulvat

The next phase of high-performance computing (HPC) at Lehigh recently took flight with the introduction of Hawk, a new research computing cluster established with a $400,000 grant from the National Science Foundation’s Campus Cyberinfrastructure program.

“Developments in HPC have unlocked certain kinds of problems that were previously unaddressable,” says Nathan Urban, provost and senior vice president for academic affairs. “Whether in structural engineering, genomics and health, or physics and cosmology, simulations and data-intensive research are increasingly important to the work taking place at Lehigh.”

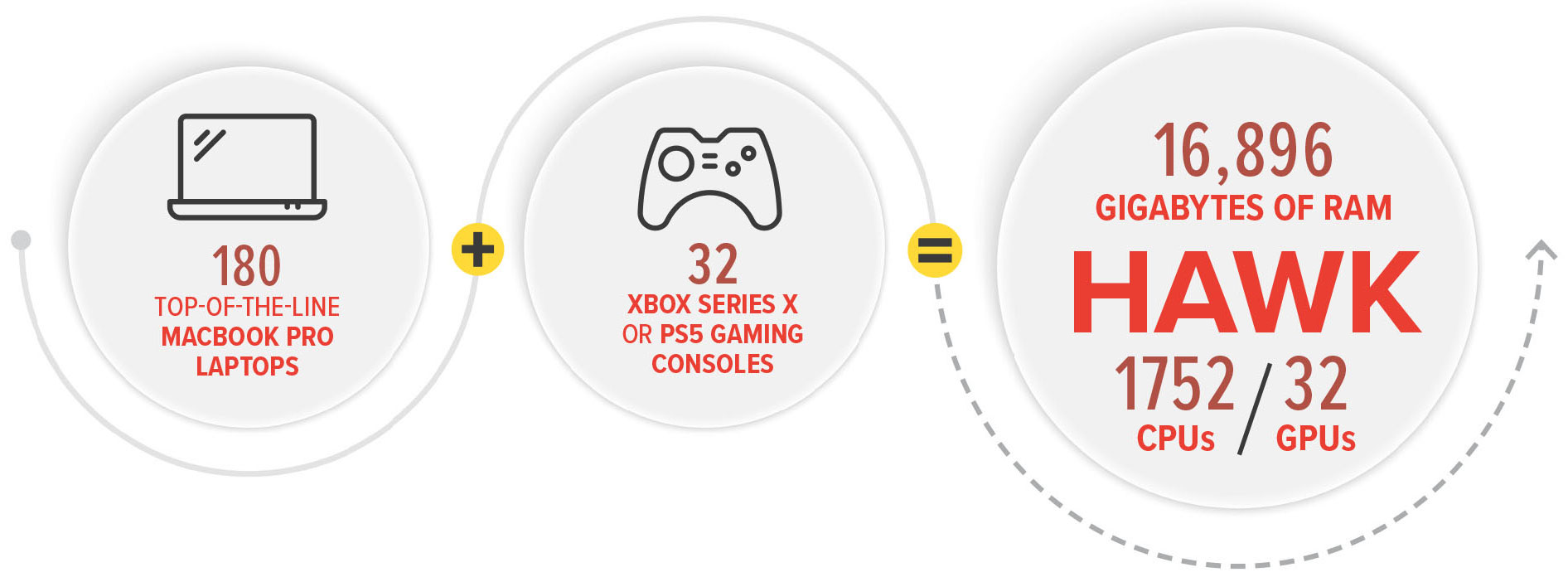

Excitement around the 34-node supercomputer goes beyond its technical specs and capabilities, according to Edmund Webb III, an associate professor of mechanical engineering and mechanics who is leading the project. (Hawk, for the record, includes 1,752 CPUs, 32 GPUs, and 16,896 gigabytes of RAM, or the computing power of about 180 top-of-the-line MacBook Pro laptops plus 32 Xbox Series X or PlayStation 5 gaming consoles. View detailed information on Hawk's technical specs on Lehigh's Research Computing website.)

By design, the new cluster is primarily “a leap forward in computing capacity,” says Webb, and its launch is a milestone in widening access to HPC resources for large-scale simulations and other data-heavy operations.

The added capacity will also allow Lehigh to join the Open Science Grid (OSG), a national distributed computing partnership for data-intensive research in fields such as high-energy physics and, more recently, the study of COVID-19.

“The OSG allows institutions to ‘donate’ their idle CPUs,” says Alex Pacheco, Lehigh’s manager of research computing and a collaborator on the grant (along with additional faculty from the Rossin College and Lehigh’s College of Arts and Sciences). “Users submit a job, and depending on availability, the data is sent to systems across the world. Participating in the OSG allows Lehigh to be part of the national and international cyberinfrastructure for scientific research.”

Moving from ‘condo’ to ‘complimentary’ computing

Back in 2016, the university provided $150,000 in seed money to establish the existing HPC cluster, Sol. Since then, the hardware has been upgraded incrementally through contributions from individual researchers’ grant awards and startup packages.

The setup, known as a “condo” computing model in the HPC community, was largely win-win: It enabled the university to build centralized research computing infrastructure while providing researchers (aka “condo investors”) with system administration and support from Lehigh’s Library and Technology Services.

Still, explains Webb, the arrangement had one clear drawback: “If you didn’t pay, you couldn’t play—there wasn’t a way for researchers to garner no-cost computing at a level to generate the kind of preliminary data that makes a proposal more competitive for funding.”

Hawk “increases the amount of computing available without requiring [researchers] to actually invest in the cluster,” says Pacheco. That clears a barrier to entry and sets the stage for new stakeholders to “buy in” once their proposals receive funding. It also provides new opportunities to train graduate students in HPC, he says, and to teach undergraduates sought-after skills.

“Hawk will support ongoing collaborations while enabling new ones that require computation to get off the ground,” Webb says, adding that data collected in connection with Hawk (e.g., papers published, proposals granted, graduate students trained) will help in going after larger instrumentation grants in the future.

Breaking boundaries with big data

Stronger research-computing infrastructure bolsters recent hiring efforts in the data science and computational space and aligns with priorities around team research that addresses engineering’s grand challenges, says Stephen P. DeWeerth, dean of the Rossin College.

“It is critically important that Lehigh support state-of-the-art research-computing infrastructure to facilitate the success of our research and researchers,” he says. “For example, all three of Lehigh’s Interdisciplinary Research Institutes (IRIs) rely heavily on HPC to pursue hard problems in areas including intelligent systems, cyber-physical infrastructure, and the design and characterization of novel materials. This infrastructure is also directly aligned with our faculty hiring priorities and is essential to our recruitment of outstanding faculty members.”

One of those researchers is Webb, who uses HPC for molecular simulations as part of a multidisciplinary research team investigating the human blood protein von Willebrand Factor—work that could lead to advances in drug delivery for treating cardiovascular disease.

Yet the potential for collaborations that harness the power of advanced computing isn’t limited to science and engineering, notes Robert Flowers, Herbert J. and Ann L. Siegel Dean of Lehigh’s College of Arts and Sciences.

“There are many opportunities where data intersects with humanities and the social sciences,” he says. “A great deal of the work by our colleagues in these areas now requires computation to solve the complex problems they are studying.”

For instance, Flowers points to Haiyan Jia, an assistant professor of journalism and communications, who is working with computer science and engineering faculty members Brian Davison and Jeffrey Heflin. Their NSF-funded project is investigating new approaches to full-content dataset search that would serve both scientists and journalists in their pursuits.

Faculty members in both colleges are also designing classes and pedagogical approaches that combine data science and social justice topics.

Increasing participation in the HPC community—and using it to generate new knowledge—requires more than just the right hardware, says Greg Reihman, vice provost for Library and Technology Services and director of the Center for Innovation in Teaching and Learning.

“It’s never just the computers,” he says. “It’s the networking underneath the surface, the HVAC system that keeps the data center cool, all the infrastructure involved. And, of course, it comes down to having skilled staff who keep it all running. With the launch of Hawk, we’re celebrating the growth we’ve seen over the past few years in creating these shared resources—and we’re looking ahead to what’s next.”