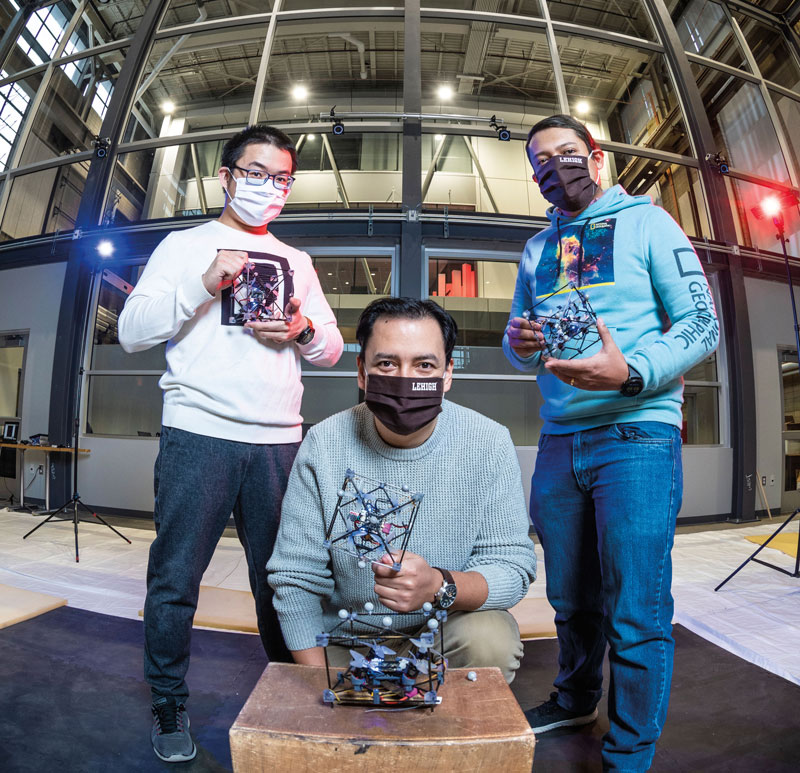

The new AIR Lab facility (top photo), a towering glass cube in Building C, opens doors to new collaborations among robotics researchers, including Professor Nader Motee (standing), Mechanical Engineering and Mechanics.

Story by Richard Laliberte

Photography by Ryan Hulvat/Meris

In Building C on Lehigh’s Mountaintop Campus, a new facility has taken shape. With a high ceiling and transparent glass walls, it’s a hive of sorts, enclosed partly as a way to dampen the whir, hum, and drone of robots operating both on the ground and in the air. Welcome to the new, pulsing core of the Autonomous and Intelligent Robotics Laboratory (AIR Lab).

Impressive as the lab looks, its concentration of machines and support infrastructure, such as a state-of-the-art motion capture system, isn’t the lab’s most important feature. Even more valuable is its concentration of brainpower. “The new lab puts researchers together where they’re interacting and leveraging knowledge,” says Jeffrey Trinkle, P.C. Rossin Professor and Chair of the Department of Computer Science and Engineering (CSE). “There’s tremendous potential for collaborative research across a wide variety of areas.”

Among those rubbing elbows in the lab will be groups from CSE, mechanical engineering, electrical engineering, industrial engineering, and other disciplines affiliated with Lehigh’s Institute for Data, Intelligent Systems, and Computation (I-DISC). Together, multiple researchers approaching from different angles will use the lab to attack some of the most vexing problems in a field that’s both inherently interdisciplinary and central to the future of robotics: the science of autonomy.

FROM SENSING TO ACTION

The idea that human-made objects might operate independently, if not think for themselves, has roots in ancient myths and legends, but it began finding modern expression with the origin of the word “robot” in R.U.R. (Rossum’s Universal Robots), a famous 1920 science fiction play by Karel Čapek. It created the mold for every fictional semi-sentient machine you’ve encountered on screens or pages ever since.

“Robotics 2.0 is to have robots and humans coexist and even co-work effectively.”

But it’s exponentially easier to imagine an anthropomorphized, mechanical Robby, Rosie, or C-3PO than it is to create an actual machine that can sense its environment, gather data, pick out the most relevant details, infer some form of meaning, come to conclusions, make decisions, form plans, and execute tasks.

Each of those steps is critical to successful autonomy. And each presents challenges interrelated with challenges posed at every other step. “If you had to define ‘the science of autonomy’ in one line, I’d say it’s closing the gap between sensing and action,” explains mechanical engineering and mechanics professor Nader Motee. “Humans do this easily and constantly. The question is: How do we make a robot understand the entire process very quickly, almost in real time?”

It’s a far cry from what robots have done in the past. “Robotics initially evolved to hard automation,” Trinkle says. “Think of the old-style manufacturing assembly lines where you had different stations with a machine made to do one particular job efficiently, accurately, and many, many times over.” People generally were kept apart from working robots partly out of concern that automation could prove dangerous if a human inadvertently got in a machine’s way.

In recent years, research initiatives such as a key program from the Office of Naval Research have sought to nudge robots toward more versatile capabilities. “Robotics 2.0 is to have robots and humans coexist and even co-work effectively without danger to people,” Trinkle says. “The robots become more capable of thinking and doing the right thing at the right time in harmony, and sometimes in collaboration with humans. Robots and people do what they’re best at and can form teams that perform better than either robots or humans could by themselves.”

Self-driving cars and package-delivering drones are two of the most familiar prospective applications of advanced robotic technology. But future uses could far exceed those visions. At Lehigh alone, researchers foresee their work potentially being used in areas as diverse as search and rescue, agriculture, construction, space missions, structural repairs, biotechnology, disaster response, environmental cleanup, surveillance, and even parking-space allocation.

“The goal is to have versatile machines that can accept high-level commands and then do jobs by themselves,” says David Saldaña, an assistant professor of computer science and engineering. “An important part is what we do behind the scenes with mathematical models, algorithms, and programming.”

Motee (seated) works with students at the AIR Lab on Mountaintop Campus.

CONTROLLING COMPUTATION

At the front end of the sensing-to-action progression, robots need to perceive their environment. “That’s a challenge right there,” Motee says. “How will they process all that information?” Streaming images from an onboard high-frame-rate camera might provide up to 1,000 frames per second from a single robot, each frame packing four or five megabytes of data. One second later comes another data dump potentially as big as 5,000 megabytes. In multi-agent networks of robots such as drone swarms, this information might be passed between individual units. “If they’re sharing raw information from each frame, that’s extremely costly in terms of processing,” Motee says. “We need preprocessing of only relevant information.”
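The arithmetic behind those figures can be sketched in a few lines of Python. The frame rate and frame size come from the example above; the swarm size, the all-to-all sharing pattern, and the size of a per-frame feature summary are hypothetical values chosen only to illustrate why sharing preprocessed information is so much cheaper than sharing raw frames.

```python
# Back-of-the-envelope data rates for camera-equipped robots.
FRAMES_PER_SECOND = 1_000   # high-frame-rate camera, from the example in the text
MB_PER_FRAME = 5            # upper end of "four or five megabytes"


def raw_rate_mb_per_s(fps: float, mb_per_frame: float) -> float:
    """Data produced by one robot each second, in megabytes."""
    return fps * mb_per_frame


def swarm_broadcast_mb_per_s(n_robots: int, per_robot_mb_per_s: float) -> float:
    """Total data received per second if every robot streams to every other robot."""
    return n_robots * (n_robots - 1) * per_robot_mb_per_s


raw = raw_rate_mb_per_s(FRAMES_PER_SECOND, MB_PER_FRAME)

# Hypothetical values: 10 drones, each frame reduced to a 1 KB feature summary.
N_ROBOTS = 10
SUMMARY_MB_PER_FRAME = 0.001
summary = raw_rate_mb_per_s(FRAMES_PER_SECOND, SUMMARY_MB_PER_FRAME)

print("one robot, raw frames (MB/s):", raw)
print("swarm, raw frames (MB/s):", swarm_broadcast_mb_per_s(N_ROBOTS, raw))
print("swarm, feature summaries (MB/s):", swarm_broadcast_mb_per_s(N_ROBOTS, summary))
```

Under these assumed numbers, the swarm-wide load falls by a factor of several thousand once each robot shares only a compact summary of what it sees.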

Motee is developing such data-parsing capabilities in a map classification project. Suppose, he says, a group of drones is dropped into an unknown environment—say, one of 20 possible college campuses—and it’s up to the robots to figure out where they are. Motee is working on methods for each drone in a distributed network to focus only on features and landmarks relevant to whether they’re at Lehigh, Princeton, or somewhere else and to share that information optimally through the network.

Similar parsing could be used with a robot faced with a task that any human would find easy: getting up from a sofa and walking into the kitchen. A coffee table between the sofa and door would be mission-relevant because the robot must navigate the obstacle. “But it doesn’t matter if there’s a laptop on the other side of the room, because it has nothing to do with the mission,” Motee says. “The goal is mission-aware feature extraction.”

One way to extract relevant features is to employ machine learning based on pattern recognition. If you step in front of a self-driving car, its system must conclude that what it “sees” is human before making decisions, forming plans, and taking action—e.g., slamming on the brakes. “Modeling the shape of a human and how one moves based on physics is extremely hard,” Motee says. “So we don’t write an equation for the dynamics of the human body. We train the machine with lots of samples from the human body and it learns what a human looks like without caring too much about exact parameters.” The robot doesn’t need a precise description of every person alive. It can extrapolate learned patterns to objects it hasn’t seen before and recognize that you belong to the same group as a man using a walker or a child chasing a ball.

Assistant Professor Cristian-Ioan Vasile (center), Mechanical Engineering and Mechanics, speaks with his students in Building C on Mountaintop Campus.

Other tools that can help robots make decisions, plan actions, and work with other robots are based on logic that also captures events over time—temporal logic. Missions and rules described in temporal logic are automatically transformed into plans for the robots to follow, and are guaranteed to be safe and correct by construction. These techniques belong to the engineering field of “formal methods.”

“Using temporal logic, we can say something like, ‘within a given time, a robot will arrive at Site A to extract a sample, and at Site B to extract a different sample, and upload them at Site C, while following safety properties such as avoiding a hazardous area,’” says Cristian-Ioan Vasile, an assistant professor of mechanical engineering and mechanics, who investigates the use of formal methods in autonomy. Such high-level instructions generally proceed in a certain logical order, though specific combinations of tasks and timelines may provide options.
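To make the flavor of such a mission concrete, here is a minimal Python sketch that checks a recorded sequence of visited regions against the sample-collection mission described above. It is a simplified illustration, not the formal-methods machinery used in Vasile's research, which generates plans automatically rather than checking them after the fact. The function name, the region labels, and the one-region-per-time-step trace format are all assumptions made for this example.

```python
from typing import Sequence


def satisfies_mission(trace: Sequence[str], deadline: int, hazard: str = "hazard") -> bool:
    """Check a finite trace (one region label per time step) against the mission:
    visit A and B in either order, then C, all by `deadline`, and never enter `hazard`.
    """
    if hazard in trace:                      # safety property: never enter the hazardous area
        return False
    steps = list(trace[: deadline + 1])      # only time steps 0..deadline count
    if "A" not in steps or "B" not in steps:
        return False                         # a sample was not collected in time
    both_collected = max(steps.index("A"), steps.index("B"))
    # The upload at C must happen after both samples are in hand.
    return "C" in steps[both_collected + 1:]


print(satisfies_mission(["start", "A", "free", "B", "C"], deadline=5))    # True
print(satisfies_mission(["start", "A", "free", "B", "C"], deadline=3))    # False: too slow
print(satisfies_mission(["start", "A", "hazard", "B", "C"], deadline=5))  # False: unsafe
```

Note that the check accepts B before A as well as A before B, which reflects the point that the order of some tasks is left open while others (uploading after sampling) are fixed.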

Motee sees potential for both temporal logic and machine learning to guide computation in his example of a robot heading to the kitchen. If the robot had previously observed you making an omelet, “it could learn the sequence of actions you took in a specific order,” Motee says. Get eggs. Heat pan. Break shells. Classical logic built on true/false statements might tell the robot that, true, each of those steps is necessary for an omelet. But the robot also needs to understand through temporal logic that each step must be true at a certain point in time relative to other steps. Otherwise, you could have a mess instead of a meal.

“Can a robot learn these skills and express them as statements in logic? That’s still a very big open question,” Motee says. “But if we want robots to merge with society, they need to learn about human behavior—what actions we take and in what order.”

DIVERSE APPROACHES

As robots merge with society, they’ll also increasingly engage with other robots. “As we add robots, we need to plan for them,” Vasile says. “It becomes a question of scalability, which is a large issue.” Computational methods developed in recent years may handle interactions between handfuls of robots. “But if we want to plan for 20, 50, or 100 robots, the methods break down because there’s an exponential growth in the computation needed,” he says. “There’s a mathematical reason for this and it’s very hard to solve.” One approach to solutions: “In our recent work, we use structure to simplify some of these problems,” Vasile says.
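One common source of this kind of blow-up is the size of the joint state space: if every robot can be in any of a fixed number of places, the number of combined configurations a planner may have to consider multiplies with each robot added. The short Python sketch below illustrates the effect. The 100-location map is a hypothetical figure, and the count ignores collisions and other constraints, so it shows the trend rather than the cost of any particular planner.

```python
def joint_states(locations: int, n_robots: int) -> int:
    """Joint configurations when each of n_robots can occupy any of `locations` places."""
    return locations ** n_robots


LOCATIONS = 100  # hypothetical map size, chosen only for illustration

for n in (2, 5, 20, 50, 100):
    digits = len(str(joint_states(LOCATIONS, n)))
    print(f"{n:>3} robots: about 10^{digits - 1} joint states")
```

Two robots on this map yield ten thousand joint states, a number a laptop handles easily. Twenty robots yield a number with 41 digits, which is why methods that work for a handful of robots cannot simply be run longer for a large team.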

The AIR Lab brings together faculty, including Assistant Professor Subhrajit Bhattacharya, Mechanical Engineering and Mechanics, and students tackling robotics challenges from varied perspectives.

Structure can refer to a robot’s circumstances or environment. Rules of the road for a self-driving car have a particular structure, Vasile says, but not every rule applies to every situation. “We leverage that structure to mitigate the rules tracked at one time in order to manage computation,” he says. A set of rules such as the Vienna Convention on Road Traffic, used throughout Europe and elsewhere, might have about 130 rules, “but maybe only 10 of them apply to a car at a four-way stop,” Vasile says. “That can simplify planning.”
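The idea of tracking only the rules that matter in the current situation can be sketched in Python as a simple filter. Everything in this example is hypothetical: the rule names and situation labels are invented for illustration and are not taken from the Vienna Convention or from Vasile's work, and a real system would reason about far richer rule descriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    contexts: frozenset  # situations in which the rule is relevant


def active_rules(rules, situation):
    """Return only the rules relevant to the current situation."""
    return [rule for rule in rules if situation in rule.contexts]


# Hypothetical rulebook; names and contexts are invented for this sketch.
RULEBOOK = [
    Rule("yield_to_vehicle_on_right", frozenset({"four_way_stop", "unmarked_intersection"})),
    Rule("come_to_full_stop", frozenset({"four_way_stop", "stop_sign"})),
    Rule("keep_following_distance", frozenset({"highway", "city_street"})),
    Rule("no_overtaking_on_solid_line", frozenset({"highway", "rural_road"})),
]

at_stop = active_rules(RULEBOOK, "four_way_stop")
print([rule.name for rule in at_stop])
```

The planner then reasons over the short list returned for the current situation instead of the full rulebook, which is the kind of saving the 130-versus-10 comparison points to.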

Yet some robots will need to operate in environments less structured than the open road—such as the open sea. Planning for motions in complex environments can quickly outstrip computational capacity, creating a need for abstractions that can help manage the computational load. Such abstractions can be found in topology, a branch of mathematics that analyzes the shape of configuration spaces.

“We try to understand configuration spaces in terms of their shape,” says Subhrajit Bhattacharya, an assistant professor of mechanical engineering and mechanics. “This helps us abstract details of a high-degree-of-freedom system into more manageable abstractions that reduce the amount of computation necessary and also allows us to solve problems correctly.”

Some of Bhattacharya’s research uses topological approaches with application to motion planning for robots attached to some form of cable. Examples might include autonomous watercraft tethered to flexible booms that maneuver in tandem while avoiding collisions or entanglements with each other or various obstacles—potentially useful for cleaning oil spills at sea. Other possibilities might include self-operating vacuum cleaners with hoses attached to walls or cable-actuated factory robots. “It’s difficult to plan accurately for cables or control them,” Bhattacharya says. “But if you apply topological abstractions, you can simplify problems by thinking of topological classes such as moving to the left or right of an obstacle.”
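A textbook way to see what a "topological class" means is to measure how far a path turns around an obstacle. The Python sketch below does this for the simplest possible case, a single point obstacle in a flat plane, and is not a description of Bhattacharya's methods for cables or tethered robots. The function names and the sample paths are assumptions made for the example. Two paths with the same start and goal belong to the same class when they sweep the same total angle around the obstacle, no matter how much they wiggle along the way.

```python
import math


def swept_angle(path, obstacle):
    """Total signed angle (radians) the path sweeps around a point obstacle."""
    ox, oy = obstacle
    total = 0.0
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        a0 = math.atan2(y0 - oy, x0 - ox)
        a1 = math.atan2(y1 - oy, x1 - ox)
        # Wrap each step into [-pi, pi) so crossing the atan2 branch cut is handled.
        total += (a1 - a0 + math.pi) % (2 * math.pi) - math.pi
    return total


def same_class(path_a, path_b, obstacle, tol=1e-6):
    """Paths sharing endpoints are equivalent if they sweep the same angle."""
    return abs(swept_angle(path_a, obstacle) - swept_angle(path_b, obstacle)) < tol


def passing_side(path, obstacle):
    """'left' if the robot keeps the obstacle on its right (clockwise sweep), else 'right'."""
    return "left" if swept_angle(path, obstacle) < 0 else "right"


obstacle = (5, 0)
above = [(0, 0), (5, 3), (10, 0)]
wiggly_above = [(0, 0), (2, 4), (5, 1), (8, 5), (10, 0)]
below = [(0, 0), (5, -3), (10, 0)]

print(passing_side(above, obstacle), passing_side(below, obstacle))
print(same_class(above, wiggly_above, obstacle))
print(same_class(above, below, obstacle))
```

A planner that works with classes like these can first decide which side of each obstacle to pass on, then fill in the exact trajectory later, rather than searching over every possible trajectory at once.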

Topology also can help plan actions for systems with high dimensional configuration spaces such as the movement of a multi-segmented robot arm or—in the case of a project Bhattacharya is working on with Lehigh alumnus Matthew Bilsky ’12 ’14G ’17 PhD, founder of a start-up called FLX Solutions—a snake-like robot that could navigate and brace itself inside walls or other hard-to-reach spaces to perform repairs or conduct inspections.

Bhattacharya points to Vasile’s work as an example of different perspectives addressing similar problems. “Cristian focuses on formal methods while I focus more on understanding the topology and geometry of space,” he says. “But we can put together our approaches to solve problems more efficiently.”

Saldaña (center) and his students will use the new facility for their work on multi-robot systems, resilient robot swarms, and modular aerial robotics.

TEAMS OF MACHINES

Ultimately, the sensing-to-action model of autonomy comes down to robots actually executing their missions. But sensing, planning, and control are just as crucial on the business end of robotics as earlier in the autonomy process. That’s especially true for robots working together. “We want to build algorithms and controllers that allow robots to autonomously cooperate and behave as a unit even though they’re independent,” Saldaña says.

He sees teamwork as a key to the versatility Robotics 2.0 demands. Consider the prospect of drone delivery. “If you want to transport a T-shirt, a small robot is enough,” Saldaña says. “If you want to transport a sofa, you need a very large robot.” But instead of vast lineups of different-sized robots, he envisions drones that self-assemble in midair to form whatever configuration or size a task demands.

Potential applications go far beyond shipping your latest Amazon purchase. Imagine a high-rise fire in which stairwells have collapsed and people are trapped on floors above the flames far beyond the reach of ground-based rescuers. As the inferno rages, hundreds of drones appear like a whining flock of starlings. They split into groups, and individual robots in each formation dock together to form different structures. One group creates a fire-escape staircase on which people can clamber to a safer level. Another builds a bridge they can cross to an adjacent building. A third creates a hovering platform that ferries evacuees from windows to the ground.

Units forming in such multi-agent flying systems would, in effect, have to do what most robots are expressly instructed to avoid: collide with each other. Doing so in the gentlest, most controlled way possible poses considerable challenges, but Saldaña already has built aerial vehicles that can magnetically dock and pull apart in flight.

“It starts with modeling the system, which requires a lot of mathematics and physics,” Saldaña says. Docking and undocking must account for a variety of dynamic forces, including those from the linear and rotational accelerations of the drones’ propellers. “From physics and dynamics, we go to control theory (that is, a mathematical model of how a robot should behave and move) to design algorithms that we put into programming languages like C++ or Python,” Saldaña says. Sensors in the robots monitor their locations and allow the drones to quickly compensate for those forces. “The robots need to be very precise when they approach each other but also very aggressive for specific amounts of time,” Saldaña says. To detach in midair, drones reach an angle of 40 degrees in less than two seconds, like a pencil snapping in two. “That is not something they would normally do,” Saldaña says. The drones then rapidly stabilize.

Saldaña is now developing bigger, more powerful robots and increasing their capabilities. For example, he’s used a configuration of four attached drones that rotate their corners apart to create a donut-like center space capable of closing around a coffee cup and lifting it from a stand to a trash can.

Being able to grasp objects opens possibilities for robots—flying or otherwise—to manipulate their environment and accomplish tasks such as repairs—a line of research in which Trinkle specializes. “If there’s a cell phone on a counter and a robot wants to pick it up, it needs to touch the cell purposefully and safely without breaking itself, the counter, or the phone,” Trinkle says. “It may need to slide the phone to the edge of the counter and pinch the part that’s hanging over. Those kinds of contact changes where you’re touching or not touching something require a human-like deftness that’s tricky for a robot.”

Trinkle and a PhD student are tackling these challenges through machine learning and instruments such as tactile sensors. “We’re developing capabilities that don’t currently exist because researchers haven’t applied these tools specifically to solve these kinds of problems,” Trinkle says.

Further challenges lie in what may be described as human-robot relations. Vasile describes one area as “the explainability of AI”—that is, the ability of artificially intelligent robots to offer people comprehensible reasons for the decisions robots make. “This is very new and we don’t know how to do it very well, but it will be important to any applications,” Vasile says.

Another area pertains to a kind of artificial emotional intelligence. For example, a housekeeping robot might best avoid turning on when cleaning would irritate homeowners, or a workplace robot teamed with a human might be more effective if it realizes that its flesh-and-blood partner is in a bad mood or not ready. “That part of robotic perception is a step beyond what a lot of people think about,” Trinkle says.

Such considerations further broaden the interdisciplinary nature of autonomous robotics. “Challenges dealing with emotion may entail collaboration with psychologists,” Trinkle says. “In the nearer term, there’s a lot more collaboration in store between different forms of engineering and computer science.”

The new AIR Lab facility promises to accelerate multidisciplinary interactions between faculty and students alike. “It’s not enough to study programming, mechanics, or mathematics separately,” Saldaña says. “Real-world robotics needs to combine all these fields to propose solutions to problems. Having a large space to deploy many robots will allow us to develop novel platforms to explore our theories and perform realistic experiments.”

Illustration Credits: DrAfter123/iStock; Chanut Iamnoy/iStock; Aha-Soft/Shutterstock

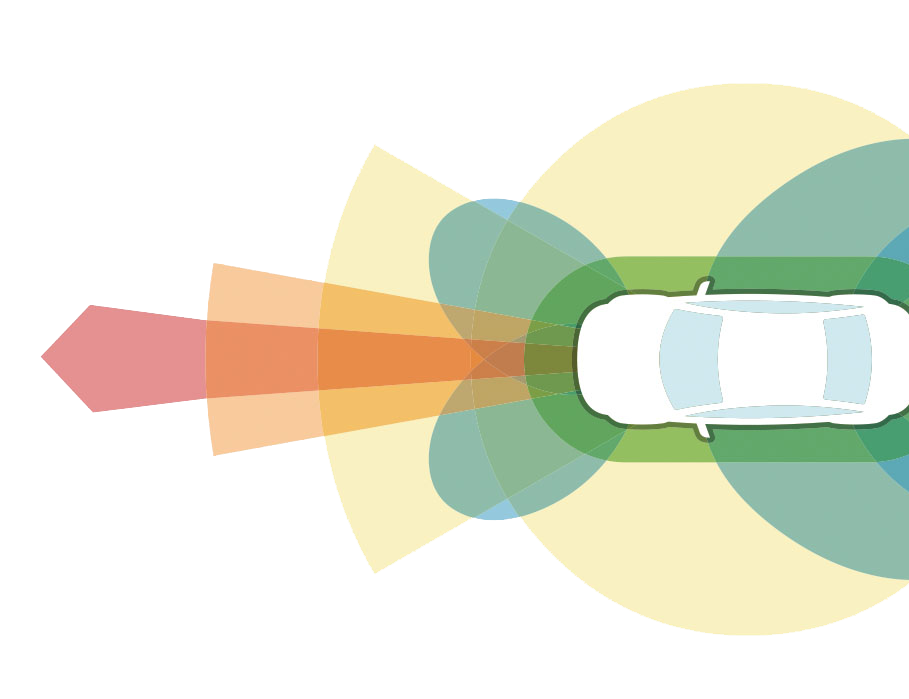

Fed into an algorithm, LiDAR data—which is more manageable in size and requires less computation than what’s captured by RGB cameras—allows the vehicle to accurately perceive its surroundings and navigate a clear, safe path.
