Our brains are masters of efficiency.

“Biology is very energy optimized,” says Yevgeny Berdichevsky (pictured), an associate professor of bioengineering and electrical and computer engineering. “The amount of energy the brain uses at any given time is roughly equal to a light bulb in terms of wattage. Replicating those computations in hardware would demand orders of magnitude more power.”

Berdichevsky and his collaborators were recently awarded a $2 million grant from the National Science Foundation to explore the complex information processing that occurs in the brain and harness it to make artificial intelligence more powerful and energy efficient.

The funding comes from NSF’s Emerging Frontiers in Research and Innovation (EFRI) program, which supports research into using biological substrates (what Berdichevsky calls “wetware”) to replicate the countless computations our brains perform—such as processing sensory input to create a picture of the world and directing our muscles to act on it.

“People have long built hardware-based neural networks to mimic the human brain,” says Berdichevsky. “But real brain circuits perform complex tasks that hardware still can’t. We want to identify those computations to inspire the next generation of AI algorithms—improving not only their efficiency, but also their capacity to process information.”

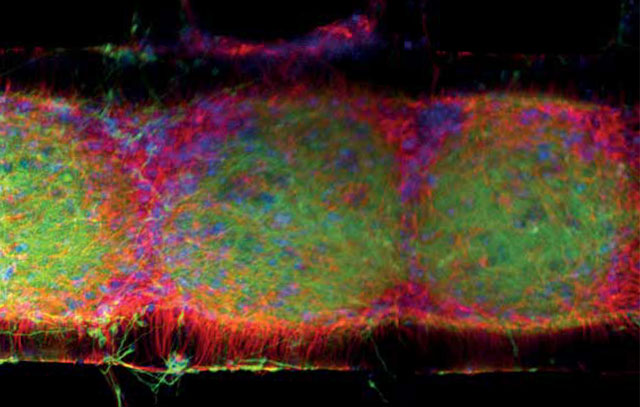

The team will study neurons within a brain organoid, a millimeter-sized, three-dimensional structure, grown in the lab from adult stem cells, that resembles a developing brain. Their first challenge is to organize the neurons so that they resemble the human cortex.

“In organoids, neurons connect randomly,” says Berdichevsky. “In our brains, they’re highly ordered—and we need that control for computation.”

To solve this, Lesley Chow (pictured), an associate professor of bioengineering and materials science and engineering, will fabricate 3D-printed biomaterial scaffolds that guide neuron placement.

“We’ve learned that we can insert neural spheroids—clusters of different neural types—into scaffold sockets, stack the layers, and essentially engineer the whole organoid from the bottom up,” he explains.

Next, they will test whether the neurons can perform dynamic computations, such as interpreting moving images. Drones and autonomous vehicles rely on an “optical flow” algorithm within computer vision software to track motion. But, says Berdichevsky, the software is less effective than hoped.
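Optical flow estimates how each patch of an image moves between consecutive video frames. The article does not say which variant drones and autonomous vehicles use. As one illustration, the classic Lucas-Kanade method assumes a small patch keeps its brightness as it moves and solves a 2x2 least-squares problem for the shift. The function name, window size, and synthetic test pattern below are illustrative assumptions, not drawn from the research.

```python
def lucas_kanade(frame1, frame2, cx, cy, half):
    """Estimate one (u, v) motion vector for the square window centred at (cx, cy).

    Frames are 2D lists indexed as frame[y][x]. The window spans cx-half..cx+half
    and cy-half..cy+half and must leave a one-pixel margin inside the image so the
    central differences stay in bounds.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            # Spatial gradients from central differences on the first frame
            ix = (frame1[y][x + 1] - frame1[y][x - 1]) / 2.0
            iy = (frame1[y + 1][x] - frame1[y - 1][x]) / 2.0
            # Temporal gradient: brightness change at this pixel between frames
            it = frame2[y][x] - frame1[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Least-squares solution of Ix*u + Iy*v + It = 0 over the window (Cramer's rule)
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window has too little texture (aperture problem)")
    u = (-sxt * syy + sxy * syt) / det
    v = (-sxx * syt + sxy * sxt) / det
    return u, v


# Synthetic test pattern: a smooth bowl of brightness, shifted by (0.4, -0.3) pixels
N, C = 21, 10
true_u, true_v = 0.4, -0.3
frame1 = [[(x - C) ** 2 + (y - C) ** 2 for x in range(N)] for y in range(N)]
frame2 = [[(x - C - true_u) ** 2 + (y - C - true_v) ** 2 for x in range(N)]
          for y in range(N)]

u, v = lucas_kanade(frame1, frame2, cx=C, cy=C, half=5)
print(round(u, 6), round(v, 6))
```

On this test pattern the estimate recovers the imposed shift. A window with too little texture makes the 2x2 system singular, which is the "aperture problem" that limits local methods of this kind.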

“My goal is to use the complex dynamics of cortical neurons to do this better and with less energy,” he says.

The team will adapt methods from earlier studies where they stimulated neurons with light. They encoded an image into a sequence of optical pulses, then directed the pulses to specific neurons, allowing the cells to “see” the images.
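The article doesn't describe how the team converts an image into light pulses. One simple possibility, assumed here purely for illustration, is rate coding: each pixel is assigned to one target neuron, and brighter pixels receive more evenly spaced pulses within a fixed time window. The function name, window length, and pulse cap below are hypothetical.

```python
def encode_image(image, window_ms=100.0, max_pulses=10):
    """Rate-code a grayscale image as light-pulse trains, one per target neuron.

    image: 2D list of intensities in [0, 1]. Pixel (row, col) is assigned to the
    hypothetical target neuron row * width + col.
    Returns {neuron_id: [pulse times in ms]}, pulses evenly spaced in the window.
    """
    width = len(image[0])
    schedule = {}
    for row, pixels in enumerate(image):
        for col, intensity in enumerate(pixels):
            if not 0.0 <= intensity <= 1.0:
                raise ValueError("intensities must lie in [0, 1]")
            # Brighter pixels -> more pulses within the same time window
            n = round(intensity * max_pulses)
            step = window_ms / n if n else 0.0
            schedule[row * width + col] = [i * step for i in range(n)]
    return schedule


pulses = encode_image([[0.0, 0.5], [1.0, 0.2]])
for neuron, times in pulses.items():
    print(neuron, times)
```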

“It’s not so different from the way it works in our brain,” he says. “Our eyes essentially transform optical information into electrical information that then travels to the neurons in the cortex. Here, we bypass the eye and stimulate the neurons directly.”

Once stimulated, input neurons can relay information to output neurons, and the team can measure neural activity using a microscope. “Based on this previous work, we know we can get information into the network. The next step is to have the network do something useful with it, which is the purpose of this new project.”

The researchers plan to implement a biological version of the optical flow algorithm by encoding a movie of nature scenes as optical pulses and testing whether the network detects motion.

“We’ll be expressing a gene in these neurons that turns into a fluorescent protein,” he says. “The protein increases its fluorescence when the neuron is active, and decreases when it’s not. We can then take snapshots of which neurons are active and which are not.”
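Imaging studies often summarize this kind of fluorescence signal as ΔF/F, the change in brightness relative to a baseline. The sketch below assumes a simple baseline (the mean of the first few frames) and an arbitrary 20% threshold to turn one frame into a snapshot of active and inactive neurons. The function names and traces are invented for illustration, not data or code from the project.

```python
def delta_f_over_f(trace, baseline_frames):
    """Relative fluorescence change (F - F0) / F0, where F0 is assumed to be the
    mean of the first `baseline_frames` samples."""
    f0 = sum(trace[:baseline_frames]) / baseline_frames
    return [(f - f0) / f0 for f in trace]


def active_neurons(traces, frame, baseline_frames=3, threshold=0.2):
    """Snapshot: indices of neurons whose dF/F at `frame` exceeds `threshold`."""
    return [
        i for i, trace in enumerate(traces)
        if delta_f_over_f(trace, baseline_frames)[frame] > threshold
    ]


traces = [
    [100, 100, 100, 150, 100],  # neuron 0: brightens by 50% at frame 3
    [100, 100, 100, 105, 100],  # neuron 1: small fluctuation only
    [200, 200, 200, 260, 200],  # neuron 2: brightens by 30% at frame 3
]
print(active_neurons(traces, frame=3))
```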

Yuntao Liu, an assistant professor of electrical and computer engineering, and Berdichevsky will then develop a decoding algorithm and a computer model to interpret these patterns. By analyzing which neurons light up, the algorithm should reveal not only what the network is perceiving, but also the speed and direction of moving objects. The computer model will help the team design learning and training protocols for the engineered organoid.
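The article does not say what decoder Liu and Berdichevsky will build. One classic way to read a direction of motion out of a population of neurons is the population vector: each neuron is assumed to prefer one direction, and the decoded direction is the activity-weighted sum of those preferred directions. The sketch below covers direction only, not speed, and the four preferred directions are hypothetical.

```python
import math


def population_vector(preferred_deg, activity):
    """Decode a motion direction in degrees [0, 360) as the angle of the
    activity-weighted sum of each neuron's preferred-direction unit vector."""
    x = sum(a * math.cos(math.radians(d)) for d, a in zip(preferred_deg, activity))
    y = sum(a * math.sin(math.radians(d)) for d, a in zip(preferred_deg, activity))
    if x == 0 and y == 0:
        raise ValueError("no net directional signal")
    return math.degrees(math.atan2(y, x)) % 360.0


preferred = [0, 90, 180, 270]  # hypothetical tuning of four output neurons
print(population_vector(preferred, [1.0, 1.0, 0.0, 0.0]))  # rightward + upward
```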

Berdichevsky and his team hope to develop a proof of concept that engineered organoids can support biological computation and to use those findings to inspire more efficient and more powerful artificial neural networks.

Ally Peabody Smith, an assistant professor in Lehigh’s College of Health, will explore the ethical, social, and legal implications of utilizing brain organoids. “We don’t expect these organoids to be conscious—they’re far too small and simple,” Berdichevsky says. “But we recognize the ethical concerns and want to demonstrate that our work stays well below any threshold of consciousness.”